Lifting the veil on network processing

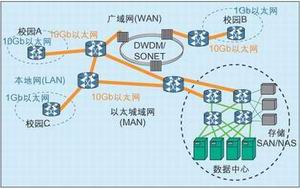

The most basic concept of network processing is the intelligent analysis, steering, and handling of network packets according to predefined classifications and rules. Note that the classifications and rules must be customizable to meet the needs of different service providers. This article focuses on line cards. Each line card has a data path and a control path on both its ingress and egress sides. The data path processes and forwards packet data at line rate without significant delay. The control path provides the processing intelligence: it updates rules, handles exceptions, and monitors statistics.
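The following Python sketch is a rough illustration of classification against customizable rules. It matches a packet's destination address, protocol, and destination port against an ordered rule list and returns the first matching action. Several details are assumptions made for this example, not a particular vendor's rule format:

- the `Packet` and `Rule` types
- the action labels
- the first-match policy

Packets that match no rule are handed to the control path, which mirrors the division of labor described above.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address, IPv4Network
from typing import List, Optional


@dataclass(frozen=True)
class Packet:
    src: IPv4Address
    dst: IPv4Address
    proto: int   # IP protocol number (6 = TCP, 17 = UDP)
    dport: int   # Layer 4 destination port


@dataclass(frozen=True)
class Rule:
    dst_net: IPv4Network
    proto: Optional[int]   # None matches any protocol
    dport: Optional[int]   # None matches any port
    action: str            # hypothetical action label, e.g. "forward" or "drop"


def classify(pkt: Packet, rules: List[Rule], default: str = "to_control_path") -> str:
    """Return the action of the first matching rule (first-match semantics)."""
    for rule in rules:
        if pkt.dst not in rule.dst_net:
            continue
        if rule.proto is not None and rule.proto != pkt.proto:
            continue
        if rule.dport is not None and rule.dport != pkt.dport:
            continue
        return rule.action
    # No rule matched: treat the packet as an exception for the control path.
    return default
```

Because the rules are plain data, a provider could change them without touching the matching code. That is the kind of customizability the text calls for.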

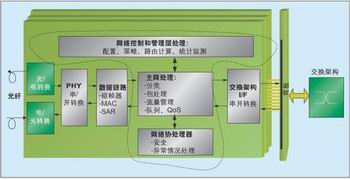

Figure 2 shows the core network processing functions of a line card. The three modules enclosed by the dashed line are the main network processing functions. Network processing is confined to the line cards, but it can still shape the overall system architecture. For example, some routers dedicate a separate set of line cards to specialized functions such as security processing. Whether a system needs such line cards depends on the network processing the equipment must perform.

Choosing the right semiconductor device

Network processing can be implemented with many semiconductor solutions, which fall broadly into two classes: ASICs and programmable devices. How do these two classes compare in network system design? The principles for choosing an ASIC or a programmable device for network processing are no different from those in any other application. At a high level, ASICs deliver higher performance for fixed functions, but their flexibility is limited. An ASIC has a high NRE (non-recurring engineering) cost and a long time to market. Even so, it remains cost-effective at high production volumes.

Programmable solutions, on the other hand, can deliver higher system-level speed, including for complex functions (special-case and exception handling). They also offer flexibility and the shortest time to market. Programmable devices incur no tape-out cost, although their unit price is somewhat higher than that of an equivalent ASIC. Because they are more flexible and scalable, programmable solutions have a longer product life than ASICs, which reduces overall system cost.
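The NRE-versus-unit-price trade-off can be made concrete with a simple break-even model, assumed here: total cost = NRE + volume × unit price. The sketch below returns the smallest volume at which the ASIC costs no more than the programmable device. All figures in the usage note are hypothetical.

```python
import math


def break_even_volume(asic_nre: float, asic_unit: float, prog_unit: float,
                      prog_nre: float = 0.0) -> int:
    """Smallest volume N at which total ASIC cost <= total programmable cost.

    Assumed cost model for both options: total(N) = NRE + N * unit_price.
    """
    if prog_unit <= asic_unit:
        raise ValueError("no break-even: programmable unit price is not higher")
    n = (asic_nre - prog_nre) / (prog_unit - asic_unit)
    return max(0, math.ceil(n))
```

As a hypothetical example, take a $1,000,000 ASIC NRE, $50 ASIC units, and $150 programmable units. For these figures, `break_even_volume(1_000_000, 50, 150)` gives 10000 units. The model deliberately omits product lifetime and field upgrades. As argued above, those factors tilt the comparison toward programmable devices.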

Despite these distinctions between ASICs and programmable solutions, no single solution currently combines the advantages of both and meets every need. The final choice depends on the specific requirements. We first consider programmable solutions for network processing.

1. Programmable solutions

There are two main types of programmable solutions: network processors (NPUs) and FPGAs. The NPU offers processor-centric (i.e., software-centric) programmability, while the FPGA offers hardware-centric programmability. Designers soon realize that a software-centric approach delivers lower performance than a hardware-centric one. It is important to distinguish network processing from network processors: network processing is a function, whereas network processors are a class of programmable devices.

2. Network processors

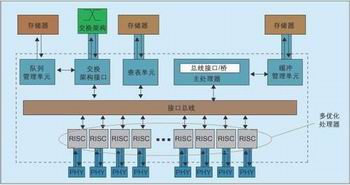

Network processors were originally conceived as off-the-shelf devices for network equipment. The aim was to offer flexibility in every respect while still delivering sufficient performance. Several semiconductor companies, large and small, entered the market with wire-speed network processors that promised very high flexibility and very short time to market. Most NPUs combine embedded RISC engines optimized for programmable functions with ASIC-like hardware circuits for general-purpose packet processing (Figure 3). Each RISC engine is optimized for specific tasks. This arrangement lets the hardware circuits handle conventional Layer 2/Layer 3 functions, while the RISC engines handle complex and special cases. Any required customization can also be delegated to the RISC engines. NPUs are usually programmed in assembly or microcode, and sometimes in a customized dialect of C.
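Splitting work between ASIC-like hardware for routine Layer 2/Layer 3 tasks and RISC engines for exceptions is often called a fast path/slow path design. The Python sketch below models only the dispatch decision. The fast-path conditions (plain IPv4, no IP options, TTL above 1) are assumptions chosen for illustration; real NPUs differ in what their hardware handles.

```python
from collections import Counter
from typing import List

# Assumed fast-path scope for this sketch: plain IPv4 only.
IPV4_ETHERTYPE = 0x0800


def fast_path_ok(pkt: dict) -> bool:
    """Checks a fixed-function (ASIC-like) block could make in hardware."""
    return (pkt["ethertype"] == IPV4_ETHERTYPE
            and pkt["ihl"] == 5      # 20-byte header, i.e. no IP options
            and pkt["ttl"] > 1)      # forwarding will not expire the packet


def dispatch(packets: List[dict]) -> Counter:
    """Route each packet to the hardware fast path or the RISC-engine slow path."""
    paths: Counter = Counter()
    for pkt in packets:
        if fast_path_ok(pkt):
            paths["fast"] += 1   # routine lookup-and-forward in hardware
        else:
            paths["slow"] += 1   # exception: IP options, TTL expiry, other protocols
    return paths
```

A well-balanced design keeps the slow-path share small, so that the RISC engines are not overwhelmed.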

3. FPGA

The FPGA is well suited to high-speed parallel processing of data, and it is highly flexible and scalable. FPGAs have consistently addressed the problems NPUs pose, and they have kept pace with the market by adding simple, practical networking features. For example, Virtex-II Pro FPGAs include a high-performance programmable fabric, embedded PowerPC processors, and 3.125 Gbps transceivers, which make them well suited to network processing. FPGAs are a strong choice for OEMs that need to bridge different Layer 2/Layer 3 traffic streams and implement high-speed functions such as security coprocessors. With these enhanced networking features, FPGAs provide high-performance data and control processing. Unlike NPUs, however, FPGAs have no built-in network processing capability and must be programmed for it. With NPUs, OEMs must develop assembly code (or C code at some level) to implement network processing functions. With FPGAs, OEMs use a hardware description language (HDL), intellectual property (IP) cores, and C to implement the data and control paths in the FPGA.

Network processing cores and packet processing reference designs are currently available for the FPGA platform. In addition, the Virtex-II Pro FPGA supports all common parallel (single-ended and differential) and serial system interface standards. This makes it easy to interface with any protocol and any device on the line card. How, then, can a programmable solution solve network processing problems?

First, consider the specific network processing functions that can benefit greatly from programmable solutions (NPUs or FPGAs).

1. Deep packet processing

Layer 2 and Layer 3 processing is easy to implement in an ASIC. However, distinguishing different priorities within similar traffic streams also requires deeper packet processing at Layers 4 and 5. Programmable solutions can inspect these packets more deeply. An NPU solution may need multiple NPUs for deep packet processing, whereas an FPGA solution needs only a single FPGA. This is because hardware parallel processing in the FPGA can fully match the RISC-based processing in NPUs. Multiple NPUs create new hardware/software partitioning challenges and increase software complexity. They also add system latency and power consumption. In general, if an NPU or FPGA handles the deeper processing, no ASIC is needed at all.
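Deep packet processing means looking past the IP header. The code below is a minimal sketch that assumes IPv4 without tunneling. It extracts the TCP/UDP ports from a raw packet and assigns a priority from a hypothetical set of high-priority destination ports. Before reading the ports, it must compute the variable IP header length and skip non-first fragments, which carry no Layer 4 header. Steps like these make Layer 4 parsing harder than fixed-offset Layer 2/Layer 3 processing.

```python
import struct
from typing import Optional, Set, Tuple

TCP, UDP = 6, 17


def parse_l4(ip_packet: bytes) -> Optional[Tuple[int, int, int]]:
    """Return (protocol, src_port, dst_port) for an IPv4 TCP/UDP packet, else None."""
    if len(ip_packet) < 20 or ip_packet[0] >> 4 != 4:
        return None                                  # too short, or not IPv4
    ihl = (ip_packet[0] & 0x0F) * 4                  # IP header length in bytes
    proto = ip_packet[9]
    frag_offset = struct.unpack("!H", ip_packet[6:8])[0] & 0x1FFF
    if proto not in (TCP, UDP) or frag_offset != 0 or len(ip_packet) < ihl + 4:
        return None                                  # no Layer 4 ports available
    sport, dport = struct.unpack("!HH", ip_packet[ihl:ihl + 4])
    return proto, sport, dport


def priority(ip_packet: bytes, high_ports: Set[int]) -> str:
    """Assign a priority class from the Layer 4 destination port."""
    l4 = parse_l4(ip_packet)
    if l4 is not None and l4[2] in high_ports:
        return "high"
    return "best_effort"
```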

2. Software upgradeability

The main advantage of a processor-centric design is its software. Control-plane software is a key value-added element for many OEMs and lets them differentiate themselves from competitors. Code reuse is therefore crucial both for time to market and for supporting existing products. C code can be developed relatively quickly and is easy to port or interface to new processors. DSP designers and architects understand this well, and they choose DSP processors when code reuse matters more than performance.

NPUs, however, offer little code reuse, because most NPU software is non-portable. It is either proprietary assembly code or C code that has lost portability through heavy customization. Assembly code is tied to a specific processor and usually has a long development cycle, which increases development risk. In fact, code a company writes for one NPU cannot even be ported to that same company's next-generation NPU. The NPU industry is clearly aware of this and is working toward standardization to restore designers' confidence. FPGAs, by contrast, offer strong software upgradeability. Even so, developing data-plane processing in HDL or C and control-plane processing in C still poses many difficulties. Modifying features in proprietary assembly code is riskier and harder than doing so in industry-standard HDL and C. Some FPGA vendors provide platform- and toolset-based approaches that allow software to be ported seamlessly across FPGA generations.

3. Hardware upgradeability

Hardware scalability ensures a long product life, which makes it a key feature of programmable solutions. Scalability also lets network equipment keep up with changing standards and protocols; otherwise, the equipment quickly becomes obsolete. An NPU is programmable only in its processors, and its ASIC-like custom hardware cannot be reprogrammed directly. When it comes to hardware upgrades, the NPU therefore suffers much the same disadvantage as an ASIC. FPGAs, as the name suggests, are field-programmable, so they can easily be upgraded to meet changing requirements.

4. Complex classification and lookup

Services such as VPNs (virtual private networks) and IPSec require sophisticated lookup capabilities. Lookup and classification can be performed with complex iterative algorithms, but these algorithms tie up the RISC engines in an NPU and so degrade overall system performance. An NPU can respond in one of two ways:

(a) raise its clock frequency to gain headroom;
(b) add more NPUs.

An excessively high clock frequency causes signal integrity problems and complicates the board design. Multiple NPUs raise the same problems described above for deep packet processing. NPU lookups also require a more costly memory subsystem. An FPGA can implement lookups as state machines in logic, but this is not always efficient, so lookup coprocessors and SDRAM may still be indispensable.
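The article does not name a specific lookup algorithm. As one classic example of an iterative lookup, assumed here for illustration, the sketch below implements IPv4 longest-prefix match with a unibit binary trie. Each lookup walks one node per address bit, for up to 32 dependent steps, and on real hardware each step would be a memory access. This is why such algorithms consume RISC-engine cycles and memory bandwidth.

```python
from ipaddress import IPv4Address, IPv4Network
from typing import Optional, Tuple


class Node:
    __slots__ = ("children", "next_hop")

    def __init__(self) -> None:
        self.children: list = [None, None]     # child for bit 0 and bit 1
        self.next_hop: Optional[str] = None    # set if a prefix ends here


class PrefixTrie:
    """Unibit binary trie for IPv4 longest-prefix match."""

    def __init__(self) -> None:
        self.root = Node()

    def insert(self, prefix: str, next_hop: str) -> None:
        net = IPv4Network(prefix)
        addr = int(net.network_address)
        node = self.root
        for i in range(net.prefixlen):         # one level per prefix bit
            bit = (addr >> (31 - i)) & 1
            if node.children[bit] is None:
                node.children[bit] = Node()
            node = node.children[bit]
        node.next_hop = next_hop

    def lookup(self, address: str) -> Tuple[Optional[str], int]:
        """Return (next_hop, nodes_visited); each visit models one memory access."""
        addr = int(IPv4Address(address))
        node, best, visits = self.root, self.root.next_hop, 0
        for i in range(32):
            node = node.children[(addr >> (31 - i)) & 1]
            if node is None:
                break
            visits += 1
            if node.next_hop is not None:
                best = node.next_hop           # remember the longest match so far
        return best, visits
```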

5. Accounting and billing

Billing methods change as operators roll out new services. These methods vary from provider to provider and cannot be implemented with fixed-function hardware. Programmable solutions can quickly provide a billing architecture by collecting and interpreting statistics, while keeping the number of supporting peripheral devices to a minimum. Both NPUs and FPGAs provide the necessary billing flexibility.
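One way to read "collecting and interpreting statistics" is to separate two things:

- the counters, which the data path updates for every packet;
- the billing policy, which interprets those counters.

The Python sketch below assumes flows are keyed by (VPN group, DiffServ code point). The key choice, the `per_megabyte` policy, and the rate are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, Tuple

FlowKey = Tuple[str, int]  # (VPN group, DiffServ code point): assumed key


class FlowStats:
    """Per-flow packet and byte counters, as the data path might maintain them."""

    def __init__(self) -> None:
        self.packets: Dict[FlowKey, int] = defaultdict(int)
        self.bytes: Dict[FlowKey, int] = defaultdict(int)

    def count(self, key: FlowKey, length: int) -> None:
        self.packets[key] += 1
        self.bytes[key] += length


# A billing policy interprets raw counters: (key, packets, bytes) -> charge.
BillingPolicy = Callable[[FlowKey, int, int], float]


def per_megabyte(rate: float) -> BillingPolicy:
    """Hypothetical volume-based policy: charge `rate` per 10^6 bytes."""
    return lambda key, pkts, nbytes: nbytes / 1_000_000 * rate


def bill(stats: FlowStats, policy: BillingPolicy) -> Dict[FlowKey, float]:
    return {k: policy(k, stats.packets[k], stats.bytes[k]) for k in stats.bytes}
```

With this design, a provider could switch to a new billing method by supplying a different policy function. The per-packet counting code would stay the same.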

6. Fewer devices

In a system such as a router with multiple line cards, reducing the number of devices on each line card yields cumulative benefits. There is always a trade-off between device count and required performance, so cramming every function into a single device would compromise overall performance. For example, implementing the security processing function on the main packet processing device would reduce the device count and could also improve performance. The NPU initially promised to perform all functions with fewer devices but ultimately failed to deliver. NPU-based solutions require multiple dedicated coprocessors to meet performance requirements. What limits the FPGA is not performance but resource efficiency. Some applications that require lookups and intensive control are better implemented with coprocessors or embedded processors, while the FPGA logic is well suited to high-speed data processing.

7. Time to market

Time to market is one of the main drivers behind programmable network processing solutions. The NPU relies on a processor-centric model to shorten time to market. However, three factors delay it:

- assembly code development;
- partitioning data across multiple NPUs;
- integrating coprocessors.

Even so, time to market is greatly shortened compared with ASICs. The FPGA shortens not only the development cycle but also the debugging cycle, further accelerating time to market. The biggest difference between the two lies in the software: the NPU uses assembly code, while the FPGA uses HDL.

The NPU must maintain many functions, and the FPGA is well suited to any programmable design. Solving the network processing problem should not require many coprocessors, because that defeats the original purpose of introducing the NPU. The appropriate solution is to combine FPGAs, NPUs, and one or two coprocessors selectively and judiciously.

Example: an FPGA implementation of network processing

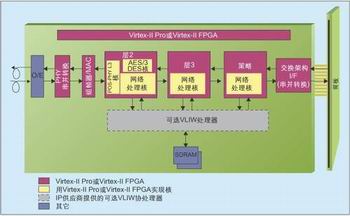

This article proposes a solution based on a new generation of FPGA. A Virtex-II Pro FPGA implements the data path and control functions, while coprocessors handle packet classification and lookup. A 2.5 Gbps (OC-48) line card serves as the example below.

1. Designing a 2.5 Gbps line card with VPN and policy rules

The 2.5 Gbps (OC-48) line rate is attracting growing attention because OC-48 strikes an effective balance between bandwidth and cost. IPSec-encrypted VPNs are a high-priority service that service providers are pursuing. For the next generation of line cards, router manufacturers want the system to offer most or all of the following features (Figure 4):

- VPN support with multiple local routing tables and IPSec encryption/decryption
- full-duplex OC-48 operation with SONET-based packet transport
- MPLS-based VPNs
- thousands of VPN groups, with multiple policy rules per VPN group
- millions of prefixes
- DiffServ support
- QoS classification by VPN group number, ToS field, and TCP/UDP source and destination ports
- thousands of QoS rules within each VPN group
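These requirements translate into a tight per-packet time budget. The sketch below computes that budget under three assumptions:

- the article's rounded 2.5 Gbps rate (the nominal OC-48 rate is 2.488 Gbps);
- worst-case 40-byte packets (a minimal IPv4 header plus a minimal TCP header);
- no framing overhead.

```python
def packets_per_second(line_rate_bps: float, packet_bytes: int) -> float:
    """Packet arrival rate when minimum-size packets arrive back to back."""
    return line_rate_bps / (packet_bytes * 8)


def per_packet_budget_ns(line_rate_bps: float, packet_bytes: int) -> float:
    """Time available per packet, in nanoseconds, at the given line rate.

    Ignores SONET and link-layer framing overhead, which an exact budget would include.
    """
    return packet_bytes * 8 / line_rate_bps * 1e9
```

For these inputs, the result is about 7.8 million packets per second, or roughly 128 ns per packet in each direction. Every lookup, and every classification against thousands of QoS rules, must fit within that window or be pipelined across several such windows.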

2. Design options

Design choices usually depend on the number of rules required, performance (packets or cells processed per second), device count, and power consumption. This article focuses on classification and traffic management. The devices commonly used on line cards to implement these functions include:

(1) Network Processors and Coprocessors

Classification is the most computation-intensive task. The NPU requires a large amount of dedicated memory (such as external ZBT SRAM) and consumes considerable power. VPNs typically rely on IPSec for network security, which must be supported in both the control path and the data path. Because classification consumes all of the NPU's processing capacity, a coprocessor is needed that can handle both IPSec security processing and traffic management.

(2) FPGAs and Optional Coprocessors

FPGAs ensure line-rate IPSec security and provide faster classification and lookup than NPUs. For IPSec, the Virtex-II Pro splits the work between software and logic:

- the control path (key exchange) runs as software on the embedded PowerPC processor;
- the data-path AES/3-DES encryption/decryption runs at gigabit line rates in the FPGA logic.

If the QoS rules must change frequently, external VLIW coprocessors can implement the FSM-intensive classification functions. The embedded PowerPC processor can perform all control and management functions.

(3) Memory Subsystem

A CAM-based lookup subsystem provides high lookup rates and supports very large table structures. However, CAMs consume a lot of power (20 W per device), and supporting larger tables generally requires many CAM and SRAM devices. Expensive ZBT SRAMs provide higher speed and support NPUs in Layer 2 and Layer 3 packet classification, but they also require more power.
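A CAM returns a match in a single search because it compares the key against every entry in parallel. That parallelism is largely why it draws so much power. The Python sketch below is only a functional model of a ternary CAM (TCAM). Entries are given as value/mask pairs, and table order stands in for priority. The example entries are hypothetical. Placing longer prefixes first lets a TCAM perform longest-prefix match in one search.

```python
from typing import List, Optional, Tuple

# Each entry is (value, mask, result). A 1 bit in the mask means "compare this
# bit"; a 0 bit means "don't care". Earlier entries have higher priority.
Entry = Tuple[int, int, str]


def tcam_lookup(key: int, table: List[Entry]) -> Optional[str]:
    """Software model of a ternary CAM: return the highest-priority match.

    A real TCAM compares the key against all entries simultaneously in hardware;
    this loop reproduces only the result, not the single-cycle timing.
    """
    for value, mask, result in table:
        if (key & mask) == (value & mask):
            return result
    return None
```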

SDRAM is the most economical option and is therefore used in most systems. Low-power SDRAM achieves higher performance and supports larger tables through pipelined and multithreaded architectures. A design that tries to recover performance only by raising the processor clock speed, however, is clearly unsuitable. The combination of FPGAs, VLIW/RISC processors, and SDRAM provides the best solution for 2.5 Gbps line cards with VPN and IPSec.
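Pipelining and multithreading hide SDRAM latency by keeping several lookups in flight at once. Little's law gives the required concurrency: lookups in flight = lookup rate × time per lookup. The sketch below applies this law. The example figures of 60 ns per access and four dependent accesses per lookup are hypothetical. The model also ignores bank conflicts, refresh, and bandwidth limits.

```python
import math


def lookups_in_flight(packets_per_s: float, lookups_per_packet: int,
                      accesses_per_lookup: int, access_latency_ns: float) -> int:
    """Little's law: concurrency = arrival rate x time each request spends in service."""
    rate = packets_per_s * lookups_per_packet              # lookups per second
    latency_ns = accesses_per_lookup * access_latency_ns   # dependent accesses run serially
    return math.ceil(rate * latency_ns / 1e9)
```

Take 7.8125 million packets per second with one lookup per packet. In that case, `lookups_in_flight(7_812_500, 1, 4, 60)` returns 2, so about two concurrent lookup threads would be needed. Doubling the lookups per packet raises this to 4.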
