Big data refers to massive, fast-growing, and diverse information assets that require new processing models in order to deliver stronger decision-making, insight, and process-optimization capabilities. The strategic significance of big data technology lies not in possessing huge volumes of data, but in processing the meaningful data professionally. In other words, if big data is likened to an industry, the key to profitability in that industry is to increase the "processing capability" applied to the data and to realize the data's "added value" through "processing".

With the advent of the cloud era, big data has attracted more and more attention. The team of analysts at Yuntai believes that the term is often used to describe the large amounts of unstructured and semi-structured data a company creates, data that would take too much time and money to load into a relational database for analysis. Big data analytics is often associated with cloud computing because real-time analysis of large datasets requires a framework like MapReduce to distribute work to dozens, hundreds, or even thousands of computers.
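The way a framework like MapReduce splits work can be illustrated with a minimal single-process sketch. It is not Hadoop's API: the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are hypothetical, and the example assumes each input string stands in for a data split that a real cluster would hand to a separate machine.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map step: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle step: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: collapse the values for one key into a single count."""
    return key, sum(values)


def word_count(documents):
    """Run map, shuffle, and reduce in sequence over all splits."""
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


# Each string plays the role of one split processed by one worker.
splits = [
    "big data needs new processing models",
    "big data is diverse data",
]
counts = word_count(splits)
print(counts["data"])  # 3
```

Because each call to `map_phase` depends only on its own split, and each call to `reduce_phase` depends only on one key, both steps could in principle run on many machines at once; that independence is what lets such a framework scale out.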

Big data structure

Big data includes structured, semi-structured, and unstructured data, and unstructured data is increasingly becoming the major part of it. According to IDC's survey report, 80% of enterprise data is unstructured, and this data grows exponentially, by 60% every year. [7] Big data is simply a representation, or feature, of the stage the Internet has now reached. There is no need to mythologize it or to be in awe of it. Against the backdrop of technological innovation represented by cloud computing, data that once seemed difficult to collect and use is becoming easy to use, and through constant innovation in all walks of life, big data will gradually create more value for humanity.

To understand big data systematically, you must break it down comprehensively and carefully, and approach it at three levels:

The first level is theory. Theory is the necessary path to understanding, and it is also the baseline that is widely recognized and disseminated. Here, the definition of big data conveys the industry's overall description and characterization of it; the discussion of its value shows what makes big data precious; its development trends show where it is heading; and the particularly important perspective of big data privacy examines the long-term contest between people and data.

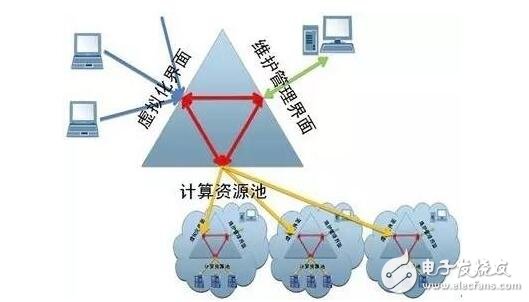

The second level is technology, which is the means by which the value of big data is realized and the cornerstone of its progress. Here, the development of cloud computing, distributed processing, storage, and sensing technologies illustrates, in turn, the whole process of collecting, processing, and storing big data.

The third level is practice, and practice is where the ultimate value of big data lies. Here, big data on the Internet, in government, in the enterprise, and for the individual shows both the promising picture that has already emerged and the blueprint that is still to be realized.

Big data features

Compared with traditional data warehouse applications, big data analysis is characterized by large data volumes and complex query analysis. The article "Architectural Big Data: Challenges, Current Situations and Prospects", published in the Journal of Computers, lists several important features that big data analysis platforms need to have. It analyzes and summarizes the current mainstream implementation platforms (parallel databases, MapReduce, and hybrid architectures based on the two) and points out their respective advantages and disadvantages. It also introduces the state of research in various directions and the authors' own work on big data analysis, and looks ahead to future research.

Big data has four "V"s, that is, four levels of features. First, the amount of data is huge, growing from the TB level to the PB level. Second, the data types are numerous: web logs, videos, pictures, geographic location information, and so on. Third, the source of the data directly affects the accuracy and authenticity of the analysis results: if the data source is complete and truthful, the final analysis results and decisions will be more accurate. Fourth, processing is fast, following the "1-second law". This last point is also what fundamentally distinguishes big data from traditional data mining techniques. The industry summarizes these features as the four "V"s: Volume, Velocity, Variety, and Veracity.

To some extent, big data is the cutting-edge technology of data analysis. In short, big data technology is the ability to quickly obtain valuable information from many different types of data. Understanding this is important, because it is this ability that gives the technology its potential for so many companies.

Ten core principles of big data technology

1. The core principle of data: from "process" as the core to "data" as the core

In the era of big data, the computing model has also changed, from taking "process" as the core to taking "data" as the core. The distributed computing framework of the Hadoop system is already a paradigm with "data" at its core. Unstructured data and analytics needs will change the way IT systems are upgraded: from simple incremental upgrades to architectural changes. This shift in the computing model is the new way of thinking that big data brings.

Scientific advancement is increasingly driven by data, and massive data brings both opportunities and new challenges to data analysis. Big data is often obtained by using a variety of techniques and methods to synthesize information from multiple sources and at different times. In order to meet the challenges brought by big data, we need new statistical ideas and calculation methods.

2. The principle of data value: from "function is value" to "data is value"

What is really interesting about big data is that the data is online, which is precisely a characteristic of the Internet. For non-Internet products, the function is the value. For today's Internet products, the data is the value.

Data can tell us each customer's propensity to consume, what they want, what they like, how each person's needs differ, and which customers can be grouped together for classification. Big data is an increase in the amount of data that lets us make the transition from quantitative change to qualitative change.

The full data set is needed, not a sample. What you do not know matters more than what you know, but with enough data, the underlying patterns become something people can see and feel.

With data so large and so plentiful, people feel that they are able to grasp the future and make judgments about uncertain situations. These ideas sound very primitive, but the way of thinking behind them is very similar to the big data we talk about today.

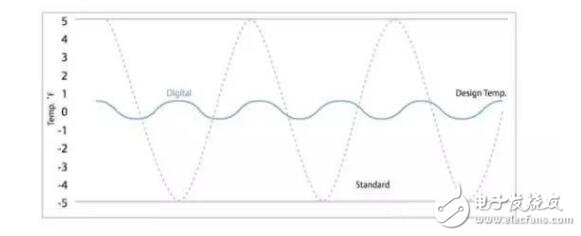

By focusing on efficiency rather than precision, big data marks a major step forward in the effort to quantify and understand the world. Many things that could not be measured, stored, analyzed, or shared in the past have now been digitized, and having vast amounts of data, including more imprecise data, opens a new door to our understanding of the world. Big data can increase productivity and sales efficiency because it lets us know the needs of the market and the needs of people. Big data makes companies' decisions more scientific by shifting the focus from accuracy to efficiency, and big data analytics can improve business efficiency.

Competition is the driving force of the enterprise, and efficiency is its lifeblood. Whether efficiency is low or high is the key measure of an enterprise's success or failure. Generally speaking, efficiency is the ratio of output to input, and pursuing high efficiency means pursuing high value. Manual labor, machines, automatic machines, and intelligent machines differ in efficiency. The intelligent machine is the most efficient and can replace human mental labor. At the core of intelligent machines is control driven by big data, and control driven by big data is faster. In a fast-changing market, rapid forecasting, rapid decision making, rapid innovation, rapid customization, rapid production, and rapid time-to-market become the norms of corporate action. That is, speed is value, efficiency is value, and all of this is inseparable from big data thinking.
