In this article I will show you how to write a short (roughly 200-line) Python script that automatically replaces the face in one image with the face from another.

The process breaks down into four steps:

1. Detect facial landmarks.

2. Rotate, scale, and translate the second image to fit over the first.

3. Adjust the color balance of the second image to match the first.

4. Blend features from the second image on top of the first.

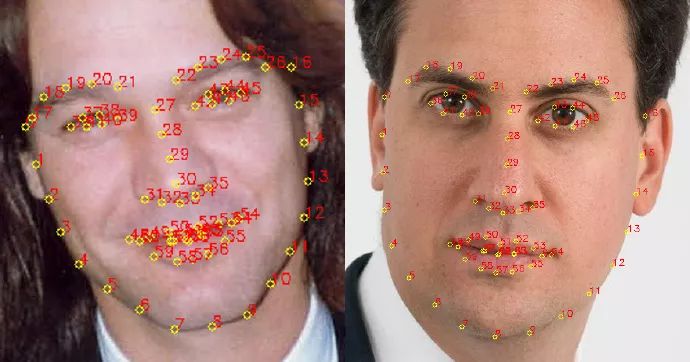

1. Use dlib to extract facial landmarks

The script uses dlib's Python bindings to extract facial landmarks.

Dlib implements the algorithm from the paper "One Millisecond Face Alignment with an Ensemble of Regression Trees" by Vahid Kazemi and Josephine Sullivan. The algorithm itself is very complex, but dlib's interface for using it is remarkably simple:

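```python
import dlib
import numpy

PREDICTOR_PATH = "/home/matt/dlib-18.16/shape_predictor_68_face_landmarks.dat"

detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor(PREDICTOR_PATH)


class TooManyFaces(Exception):
    pass


class NoFaces(Exception):
    pass


def get_landmarks(im):
    # The second argument upsamples the image once so smaller faces are found.
    rects = detector(im, 1)

    if len(rects) > 1:
        raise TooManyFaces
    if len(rects) == 0:
        raise NoFaces

    # One row per landmark: 68 rows of (x, y).
    return numpy.matrix([[p.x, p.y] for p in predictor(im, rects[0]).parts()])
```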

The get_landmarks() function takes an image in the form of a numpy array and returns a 68×2 element matrix; each row holds the x, y coordinates of one feature point in the input image.

The feature extractor (predictor) needs a rough bounding box as input to the algorithm. This is provided by a conventional face detector (detector), which returns a list of rectangles, each of which corresponds to a face in the image.
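Because each row of the matrix is one numbered landmark, a facial feature is just a list of row indices. The plain-Python sketch below (no dlib needed) reuses the script's index ranges on a synthetic landmark list and measures the distance between the two eye centres. The helper names `select`, `centroid`, and `eye_distance` are hypothetical, and the landmark data is made up for illustration.

```python
import math

# Landmark index ranges, copied from the script's constants.
RIGHT_BROW_POINTS = list(range(17, 22))
LEFT_BROW_POINTS = list(range(22, 27))
NOSE_POINTS = list(range(27, 35))
RIGHT_EYE_POINTS = list(range(36, 42))
LEFT_EYE_POINTS = list(range(42, 48))
MOUTH_POINTS = list(range(48, 61))


def select(landmarks, indices):
    """Return the (x, y) rows listed in `indices` from a 68-row landmark list."""
    return [landmarks[i] for i in indices]


def centroid(points):
    """Mean (x, y) of a list of points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def eye_distance(landmarks):
    """Distance between the centres of the left and right eye outlines."""
    lx, ly = centroid(select(landmarks, LEFT_EYE_POINTS))
    rx, ry = centroid(select(landmarks, RIGHT_EYE_POINTS))
    return math.hypot(lx - rx, ly - ry)


# Synthetic landmarks: every point at the origin except the two eye groups.
landmarks = [(60.0, 40.0) if i in LEFT_EYE_POINTS
             else (20.0, 40.0) if i in RIGHT_EYE_POINTS
             else (0.0, 0.0)
             for i in range(68)]
print(eye_distance(landmarks))  # 40.0
```

The script's `correct_colours()` uses this same eye-centre distance (via `numpy.mean` and `numpy.linalg.norm`) to size its blur kernel.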

2. Align the faces with Procrustes analysis

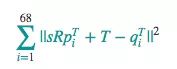

We now have two landmark matrices, each row of which holds the coordinates of a particular facial feature (for example, row 30 gives the coordinates of the tip of the nose). Next we need to work out how to rotate, translate, and scale the points of the first matrix so that they fit the points of the second as closely as possible. The idea is that the same transformation can then be used to overlay the second image on the first.

To put it more mathematically, we seek T, s, and R such that

$$\sum_{i=1}^{68} \lVert s R p_i^T + T - q_i^T \rVert^2$$

is minimized, where R is a 2×2 orthogonal matrix, s is a scalar, T is a 2-vector, and $p_i$ and $q_i$ are the rows of the landmark matrices calculated above.
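For reference, the centring, scaling, and SVD steps in the code below reduce this minimization as follows. This is a sketch of the standard orthogonal Procrustes solution, written with row vectors to match the landmark matrices. $\tilde P$ and $\tilde Q$ are names introduced here for the centred, normalized matrices with rows $\tilde p_i$ and $\tilde q_i$, and $\sigma_p$, $\sigma_q$ are the scalar standard deviations that `numpy.std` returns. Note that the code takes the scale as the ratio $\sigma_q / \sigma_p$ rather than solving for it jointly with R.

$$
c_p = \frac{1}{68}\sum_{i=1}^{68} p_i, \qquad
c_q = \frac{1}{68}\sum_{i=1}^{68} q_i, \qquad
\tilde p_i = \frac{p_i - c_p}{\sigma_p}, \qquad
\tilde q_i = \frac{q_i - c_q}{\sigma_q}
$$

$$
\Omega^{*} = \arg\min_{\Omega^T \Omega = I} \lVert \tilde P \Omega - \tilde Q \rVert_F^2 = U V^T,
\qquad \text{where } \tilde P^T \tilde Q = U \Sigma V^T
$$

$$
R = (\Omega^{*})^T = (U V^T)^T, \qquad
s = \frac{\sigma_q}{\sigma_p}, \qquad
T = c_q^T - s R\, c_p^T
$$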

It turns out that this kind of problem can be solved with ordinary Procrustes analysis:

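```python
import numpy


def transformation_from_points(points1, points2):
    # Convert to floats; the in-place subtraction and division below need them.
    points1 = points1.astype(numpy.float64)
    points2 = points2.astype(numpy.float64)

    # Remove each point set's centroid.
    c1 = numpy.mean(points1, axis=0)
    c2 = numpy.mean(points2, axis=0)
    points1 -= c1
    points2 -= c2

    # Normalize each point set by its standard deviation.
    s1 = numpy.std(points1)
    s2 = numpy.std(points2)
    points1 /= s1
    points2 /= s2

    # The landmarks are numpy.matrix objects, so * is a matrix product here.
    U, S, Vt = numpy.linalg.svd(points1.T * points2)
    R = (U * Vt).T

    # Assemble the 3x3 affine matrix [s*R | T; 0 0 1].
    return numpy.vstack([numpy.hstack(((s2 / s1) * R,
                                       c2.T - (s2 / s1) * R * c1.T)),
                         numpy.matrix([0., 0., 1.])])
```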

The code implements these steps:

1. Convert the input matrices to floating point. This is required for the operations that follow.

2. Subtract the centroid from each point set. Once an optimal scaling and rotation have been found for the resulting point sets, the centroids c1 and c2 can be used to find the full solution.

3. Similarly, divide each point set by its standard deviation. This removes the scaling component of the problem.

4. Calculate the rotation portion using the singular value decomposition. See the Wikipedia article on the orthogonal Procrustes problem for details.

5. Return the complete transformation as an affine transformation matrix.
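To make steps 1 to 5 concrete without numpy, here is a hypothetical pure-Python variant for the 2-D case; the function name `similarity_from_points_2d` is an assumption, not part of the script. It follows the same centring and standard-deviation scaling, but swaps the SVD of step 4 for the closed-form 2-D rotation angle θ = atan2(Σ(p_x q_y − p_y q_x), Σ(p_x q_x + p_y q_y)), which minimizes the same squared error over rotations.

```python
import math


def similarity_from_points_2d(points1, points2):
    """Return a 3x3 nested list [[a, -b, tx], [b, a, ty], [0, 0, 1]] with
    a = s*cos(theta), b = s*sin(theta) mapping points1 onto points2."""
    n = len(points1)
    # Steps 1-2: work in floats and subtract each set's centroid.
    c1x = sum(x for x, _ in points1) / n
    c1y = sum(y for _, y in points1) / n
    c2x = sum(x for x, _ in points2) / n
    c2y = sum(y for _, y in points2) / n
    p = [(x - c1x, y - c1y) for x, y in points1]
    q = [(x - c2x, y - c2y) for x, y in points2]
    # Step 3: divide by the standard deviation of all coordinates
    # (what numpy.std returns for the whole centred matrix).
    s1 = math.sqrt(sum(x * x + y * y for x, y in p) / (2 * n))
    s2 = math.sqrt(sum(x * x + y * y for x, y in q) / (2 * n))
    p = [(x / s1, y / s1) for x, y in p]
    q = [(x / s2, y / s2) for x, y in q]
    # Step 4: closed-form best rotation angle in 2-D (replaces the SVD).
    cross = sum(px * qy - py * qx for (px, py), (qx, qy) in zip(p, q))
    dot = sum(px * qx + py * qy for (px, py), (qx, qy) in zip(p, q))
    theta = math.atan2(cross, dot)
    scale = s2 / s1
    a, b = scale * math.cos(theta), scale * math.sin(theta)
    # Step 5: translation that carries centroid 1 onto centroid 2.
    tx = c2x - (a * c1x - b * c1y)
    ty = c2y - (b * c1x + a * c1y)
    return [[a, -b, tx], [b, a, ty], [0.0, 0.0, 1.0]]


# Points rotated by 90 degrees, scaled by 2, and shifted by (5, -1).
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (2.0, 3.0)]
dst = [(-2 * y + 5, 2 * x - 1) for x, y in src]
print(similarity_from_points_2d(src, dst))
```

Because the angle comes from atan2, this form can only return a proper rotation, whereas the SVD version searches all orthogonal matrices and could in principle return a reflection.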

The result can then be plugged into OpenCV's cv2.warpAffine function to map the second image onto the first:

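```python
import cv2
import numpy


def warp_im(im, M, dshape):
    # Start from a black canvas the size of the destination image.
    output_im = numpy.zeros(dshape, dtype=im.dtype)
    # M maps image-1 coordinates to image-2 coordinates, so it is passed as the
    # inverse (destination-to-source) map. BORDER_TRANSPARENT leaves destination
    # pixels that fall outside the source image untouched.
    cv2.warpAffine(im,
                   M[:2],
                   (dshape[1], dshape[0]),
                   dst=output_im,
                   borderMode=cv2.BORDER_TRANSPARENT,
                   flags=cv2.WARP_INVERSE_MAP)
    return output_im
```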
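To see why cv2.WARP_INVERSE_MAP is needed: M maps coordinates in image 1 to coordinates in image 2, so for every output pixel (which lives in image 1's frame) the warp must look up the source pixel at M·(x, y, 1). The hypothetical function `warp_nearest` below does that lookup on a 2-D list with nearest-neighbour sampling; it is a simplification for illustration, not OpenCV's implementation.

```python
def warp_nearest(src, M, out_h, out_w):
    """Fill an out_h x out_w grid by sampling `src` at M . (x, y, 1).

    M is a 2x3 (or 3x3) nested list mapping *output* coordinates to
    *source* coordinates, as with cv2.WARP_INVERSE_MAP.
    """
    out = [[0] * out_w for _ in range(out_h)]  # like numpy.zeros(dshape)
    src_h, src_w = len(src), len(src[0])
    for y in range(out_h):
        for x in range(out_w):
            sx = M[0][0] * x + M[0][1] * y + M[0][2]
            sy = M[1][0] * x + M[1][1] * y + M[1][2]
            ix, iy = round(sx), round(sy)
            # Samples outside the source keep their initial value, loosely
            # mimicking BORDER_TRANSPARENT.
            if 0 <= ix < src_w and 0 <= iy < src_h:
                out[y][x] = src[iy][ix]
    return out


# Each output pixel samples the source one column to the right.
print(warp_nearest([[1, 2, 3], [4, 5, 6]], [[1, 0, 1], [0, 1, 0]], 2, 3))
# [[2, 3, 0], [5, 6, 0]]
```

Pulling each output pixel from the source, rather than pushing source pixels forward, guarantees that every output pixel is visited exactly once and leaves no holes.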

The alignment results are as follows:

3. Correct the color of the second image

If we tried to overlay facial features at this point, we would soon see a problem.

The differences in skin tone and lighting between the two images cause a discontinuity around the edges of the overlaid region. Let's try to correct that:

```python
import cv2
import numpy

COLOUR_CORRECT_BLUR_FRAC = 0.6
LEFT_EYE_POINTS = list(range(42, 48))
RIGHT_EYE_POINTS = list(range(36, 42))


def correct_colours(im1, im2, landmarks1):
    # Kernel size: a fraction of the distance between the two eye centres.
    blur_amount = COLOUR_CORRECT_BLUR_FRAC * numpy.linalg.norm(
        numpy.mean(landmarks1[LEFT_EYE_POINTS], axis=0) -
        numpy.mean(landmarks1[RIGHT_EYE_POINTS], axis=0))
    blur_amount = int(blur_amount)
    # cv2.GaussianBlur needs an odd kernel size.
    if blur_amount % 2 == 0:
        blur_amount += 1
    im1_blur = cv2.GaussianBlur(im1, (blur_amount, blur_amount), 0)
    im2_blur = cv2.GaussianBlur(im2, (blur_amount, blur_amount), 0)

    # Avoid divide-by-zero errors. The cast keeps the in-place add valid for
    # uint8 images under NumPy's casting rules.
    im2_blur += (128 * (im2_blur <= 1.0)).astype(im2_blur.dtype)

    return (im2.astype(numpy.float64) * im1_blur.astype(numpy.float64) /
            im2_blur.astype(numpy.float64))
```

The results are as follows:

This function attempts to change the coloring of im2 to match that of im1. It does this by dividing im2 by a Gaussian blur of im2, then multiplying by a Gaussian blur of im1. The idea is an RGB scaling color correction, but instead of one constant scale factor for the whole image, each pixel gets its own local scale factor.
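The per-pixel scale factor can be seen in one dimension. In the sketch below a simple box blur stands in for cv2.GaussianBlur, and a single row of values stands in for an image channel; `box_blur` and `correct_colours_1d` are hypothetical names, and the data is made up.

```python
def box_blur(row, k):
    """Mean over a window of odd width k, shrinking at the ends
    (a simple stand-in for cv2.GaussianBlur)."""
    r = k // 2
    n = len(row)
    out = []
    for i in range(n):
        window = row[max(0, i - r):min(n, i + r + 1)]
        out.append(sum(window) / len(window))
    return out


def correct_colours_1d(row1, row2, k):
    """Scale each value of row2 by blur(row1) / blur(row2) at the same
    position, so every position gets its own local scale factor."""
    blur1 = box_blur(row1, k)
    blur2 = box_blur(row2, k)
    # Same guard as the script: lift near-zero values to avoid dividing by zero.
    blur2 = [b + 128 if b <= 1.0 else b for b in blur2]
    return [v * b1 / b2 for v, b1, b2 in zip(row2, blur1, blur2)]


# Image 2 is uniformly half as bright as image 1, apart from one darker "feature".
row1 = [100.0] * 7
row2 = [50.0, 50.0, 50.0, 20.0, 50.0, 50.0, 50.0]
print(correct_colours_1d(row1, row2, 3))
# [100.0, 100.0, 125.0, 50.0, 125.0, 100.0, 100.0]
```

The overall brightness is lifted to match row1 while the dark feature stays darker than its surroundings. The 125.0 values beside it show the feature leaking into its neighbours through the blur window, which is why the kernel size matters.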

With this approach, lighting differences between the two images can only be corrected to some extent. For example, if image 1 is lit from one side but image 2 has uniform lighting, the color-corrected image 2 will also appear somewhat darker on the unlit side.

That said, this is a fairly crude solution to the problem, and an appropriately sized Gaussian kernel is key. Too small, and facial features from the first image will show up in the second. Too large, and the kernel strays outside the face area for the pixels being overlaid, causing discoloration. Here a kernel of 0.6 × the pupillary distance is used.
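The kernel-size rule can be isolated as a small function. This sketch takes the two eye centres directly (the script derives them from landmarks1), truncates 0.6 × their distance to an integer, and bumps even results to the next odd number because cv2.GaussianBlur requires an odd size. `blur_kernel_size` is a hypothetical name, and `math.dist` needs Python 3.8 or later.

```python
import math

COLOUR_CORRECT_BLUR_FRAC = 0.6


def blur_kernel_size(left_eye_centre, right_eye_centre, frac=COLOUR_CORRECT_BLUR_FRAC):
    """Gaussian kernel width for color correction: frac * pupillary distance,
    truncated to an int and forced odd."""
    pupil_distance = math.dist(left_eye_centre, right_eye_centre)
    size = int(frac * pupil_distance)
    if size % 2 == 0:
        size += 1
    return size


print(blur_kernel_size((60.0, 40.0), (20.0, 40.0)))  # 25
```

Tying the kernel to the pupillary distance makes the blur scale with the face, so the same fraction behaves similarly for small and large faces in the frame.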

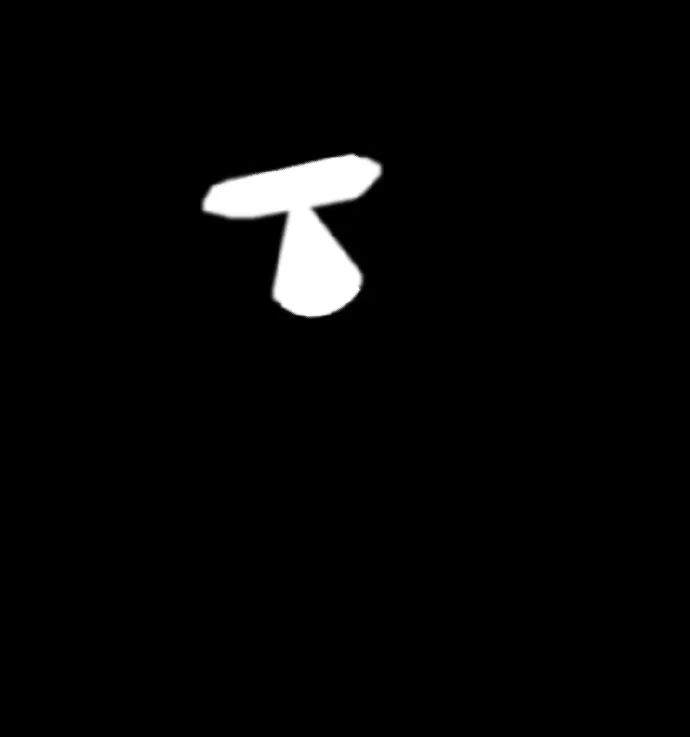

4. Blend features from the second image onto the first

A mask is used to select which parts of image 2 and which parts of image 1 should be shown in the final image.

Regions with value 1 (shown in white) are where image 2 should show through, and regions with value 0 (shown in black) are where image 1 should show through. Values between 0 and 1 give a mixture of image 1 and image 2.

This is the code that generated the above image:

```python
import cv2
import numpy

LEFT_EYE_POINTS = list(range(42, 48))
RIGHT_EYE_POINTS = list(range(36, 42))
LEFT_BROW_POINTS = list(range(22, 27))
RIGHT_BROW_POINTS = list(range(17, 22))
NOSE_POINTS = list(range(27, 35))
MOUTH_POINTS = list(range(48, 61))
OVERLAY_POINTS = [
    LEFT_EYE_POINTS + RIGHT_EYE_POINTS + LEFT_BROW_POINTS + RIGHT_BROW_POINTS,
    NOSE_POINTS + MOUTH_POINTS,
]
FEATHER_AMOUNT = 11


def draw_convex_hull(im, points, color):
    points = cv2.convexHull(points)
    cv2.fillConvexPoly(im, points, color=color)


def get_face_mask(im, landmarks):
    im = numpy.zeros(im.shape[:2], dtype=numpy.float64)

    # One filled convex polygon per landmark group.
    for group in OVERLAY_POINTS:
        draw_convex_hull(im,
                         landmarks[group],
                         color=1)

    # Repeat the single-channel mask across the three color channels.
    im = numpy.array([im, im, im]).transpose((1, 2, 0))

    # Blur and threshold to grow the mask, then blur again to feather its edge.
    im = (cv2.GaussianBlur(im, (FEATHER_AMOUNT, FEATHER_AMOUNT), 0) > 0) * 1.0
    im = cv2.GaussianBlur(im, (FEATHER_AMOUNT, FEATHER_AMOUNT), 0)

    return im


# im1, im2, landmarks1, landmarks2, M and warp_im come from the earlier steps.
mask = get_face_mask(im2, landmarks2)
warped_mask = warp_im(mask, M, im1.shape)
combined_mask = numpy.max([get_face_mask(im1, landmarks1), warped_mask],
                          axis=0)
```

Breaking this down:

get_face_mask() generates a mask for an image and its landmark matrix. It draws two white convex polygons: one around the eyes and eyebrows, and one around the nose and mouth. It then grows the mask outward and feathers its edge using an 11-pixel Gaussian kernel (FEATHER_AMOUNT), which helps hide any remaining discontinuities.
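The last two lines of get_face_mask() are the least obvious part. A blurred 0/1 mask is non-zero everywhere within half a kernel of a white pixel, so thresholding it at > 0 grows the mask outward; blurring again then turns the hard edge into a ramp. The sketch below shows the same idea in one dimension, with a box blur standing in for the Gaussian; `box_blur` and `feather_1d` are hypothetical names.

```python
def box_blur(row, k):
    """Mean over a window of odd width k, shrinking at the ends
    (a simple stand-in for cv2.GaussianBlur)."""
    r = k // 2
    n = len(row)
    out = []
    for i in range(n):
        window = row[max(0, i - r):min(n, i + r + 1)]
        out.append(sum(window) / len(window))
    return out


def feather_1d(mask_row, k=11):
    """Grow a 0/1 mask by k // 2 samples, then soften its edge."""
    # Blur, then threshold at > 0: every sample within k // 2 of a 1 becomes 1.
    grown = [1.0 if v > 0 else 0.0 for v in box_blur(mask_row, k)]
    # Blur again so the edge ramps down to 0 instead of stepping.
    return box_blur(grown, k)


mask_row = [0.0] * 10 + [1.0] * 5 + [0.0] * 10
print([round(v, 2) for v in feather_1d(mask_row, 3)])
```

With FEATHER_AMOUNT = 11 the same reasoning means the 2-D mask grows by about 5 pixels before its edge is softened.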

Such a mask is generated for both images. The mask for image 2 is transformed into image 1's coordinate space using the same transformation as in step 2.

The two masks are then combined into one by taking an element-wise maximum. Combining them ensures that the features of image 1 are covered up and the features of image 2 show through.
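Taking the element-wise maximum amounts to a union of the two soft masks: a pixel is covered if either mask covers it. The plain-Python equivalent of `numpy.max([mask_a, mask_b], axis=0)` for two equally sized 2-D masks is sketched below; `combine_masks` is a hypothetical name.

```python
def combine_masks(mask_a, mask_b):
    """Element-wise maximum of two equally sized 2-D masks (nested lists)."""
    return [[max(a, b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(mask_a, mask_b)]


print(combine_masks([[0.0, 0.5], [1.0, 0.0]], [[0.2, 0.1], [0.0, 0.0]]))
# [[0.2, 0.5], [1.0, 0.0]]
```

Using the maximum rather than only the warped mask means image 1's own features are hidden even where they extend beyond image 2's warped features.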

Finally, the combined mask is applied to give the final image:

```python
output_im = im1 * (1.0 - combined_mask) + warped_corrected_im2 * combined_mask
```
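Per pixel, this is a linear interpolation between the two images, weighted by the mask value. The hypothetical `blend` function below applies the same formula to nested lists so the three cases (mask 0, fractional, 1) are easy to check.

```python
def blend(im1, im2, mask):
    """Per-pixel linear blend: mask 0 keeps im1, mask 1 takes im2,
    values in between mix the two."""
    return [[a * (1.0 - m) + b * m for a, b, m in zip(r1, r2, rm)]
            for r1, r2, rm in zip(im1, im2, mask)]


print(blend([[10.0, 10.0, 10.0]], [[20.0, 20.0, 20.0]], [[0.0, 0.5, 1.0]]))
# [[10.0, 15.0, 20.0]]
```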

Complete code:

```python
import sys

import cv2
import dlib
import numpy

PREDICTOR_PATH = "/home/matt/dlib-18.16/shape_predictor_68_face_landmarks.dat"
SCALE_FACTOR = 1
FEATHER_AMOUNT = 11

FACE_POINTS = list(range(17, 68))
MOUTH_POINTS = list(range(48, 61))
RIGHT_BROW_POINTS = list(range(17, 22))
LEFT_BROW_POINTS = list(range(22, 27))
RIGHT_EYE_POINTS = list(range(36, 42))
LEFT_EYE_POINTS = list(range(42, 48))
NOSE_POINTS = list(range(27, 35))
JAW_POINTS = list(range(0, 17))

# Points used to line up the images.
ALIGN_POINTS = (LEFT_BROW_POINTS + RIGHT_EYE_POINTS + LEFT_EYE_POINTS +
                RIGHT_BROW_POINTS + NOSE_POINTS + MOUTH_POINTS)

# Points from the second image to overlay on the first. The convex hull of each
# element will be overlaid.
OVERLAY_POINTS = [
    LEFT_EYE_POINTS + RIGHT_EYE_POINTS + LEFT_BROW_POINTS + RIGHT_BROW_POINTS,
    NOSE_POINTS + MOUTH_POINTS,
]

# Amount of blur to use during colour correction, as a fraction of the
# pupillary distance.
COLOUR_CORRECT_BLUR_FRAC = 0.6

detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor(PREDICTOR_PATH)


class TooManyFaces(Exception):
    pass


class NoFaces(Exception):
    pass


def get_landmarks(im):
    rects = detector(im, 1)

    if len(rects) > 1:
        raise TooManyFaces
    if len(rects) == 0:
        raise NoFaces

    return numpy.matrix([[p.x, p.y] for p in predictor(im, rects[0]).parts()])


def annotate_landmarks(im, landmarks):
    im = im.copy()
    for idx, point in enumerate(landmarks):
        # OpenCV's drawing functions expect plain Python ints for coordinates.
        pos = (int(point[0, 0]), int(point[0, 1]))
        cv2.putText(im, str(idx), pos,
                    fontFace=cv2.FONT_HERSHEY_SCRIPT_SIMPLEX,
                    fontScale=0.4,
                    color=(0, 0, 255))
        cv2.circle(im, pos, 3, color=(0, 255, 255))
    return im


def draw_convex_hull(im, points, color):
    points = cv2.convexHull(points)
    cv2.fillConvexPoly(im, points, color=color)


def get_face_mask(im, landmarks):
    im = numpy.zeros(im.shape[:2], dtype=numpy.float64)

    for group in OVERLAY_POINTS:
        draw_convex_hull(im,
                         landmarks[group],
                         color=1)

    im = numpy.array([im, im, im]).transpose((1, 2, 0))

    im = (cv2.GaussianBlur(im, (FEATHER_AMOUNT, FEATHER_AMOUNT), 0) > 0) * 1.0
    im = cv2.GaussianBlur(im, (FEATHER_AMOUNT, FEATHER_AMOUNT), 0)

    return im


def transformation_from_points(points1, points2):
    """
    Return an affine transformation [s * R | T] such that:

        sum ||s*R*p1,i + T - p2,i||^2

    is minimized.
    """
    # Solve the procrustes problem by subtracting centroids, scaling by the
    # standard deviation, and then using the SVD to calculate the rotation. See
    # the following for more details:
    #   https://en.wikipedia.org/wiki/Orthogonal_Procrustes_problem

    points1 = points1.astype(numpy.float64)
    points2 = points2.astype(numpy.float64)

    c1 = numpy.mean(points1, axis=0)
    c2 = numpy.mean(points2, axis=0)
    points1 -= c1
    points2 -= c2

    s1 = numpy.std(points1)
    s2 = numpy.std(points2)
    points1 /= s1
    points2 /= s2

    U, S, Vt = numpy.linalg.svd(points1.T * points2)

    # The R we seek is in fact the transpose of the one given by U * Vt. This
    # is because the above formulation assumes the matrix goes on the right
    # (with row vectors) whereas our solution requires the matrix to be on the
    # left (with column vectors).
    R = (U * Vt).T

    return numpy.vstack([numpy.hstack(((s2 / s1) * R,
                                       c2.T - (s2 / s1) * R * c1.T)),
                         numpy.matrix([0., 0., 1.])])


def read_im_and_landmarks(fname):
    im = cv2.imread(fname, cv2.IMREAD_COLOR)
    if im is None:
        raise IOError("Could not read image {}".format(fname))
    im = cv2.resize(im, (im.shape[1] * SCALE_FACTOR,
                         im.shape[0] * SCALE_FACTOR))
    s = get_landmarks(im)

    return im, s


def warp_im(im, M, dshape):
    output_im = numpy.zeros(dshape, dtype=im.dtype)
    cv2.warpAffine(im,
                   M[:2],
                   (dshape[1], dshape[0]),
                   dst=output_im,
                   borderMode=cv2.BORDER_TRANSPARENT,
                   flags=cv2.WARP_INVERSE_MAP)
    return output_im


def correct_colours(im1, im2, landmarks1):
    blur_amount = COLOUR_CORRECT_BLUR_FRAC * numpy.linalg.norm(
        numpy.mean(landmarks1[LEFT_EYE_POINTS], axis=0) -
        numpy.mean(landmarks1[RIGHT_EYE_POINTS], axis=0))
    blur_amount = int(blur_amount)
    if blur_amount % 2 == 0:
        blur_amount += 1
    im1_blur = cv2.GaussianBlur(im1, (blur_amount, blur_amount), 0)
    im2_blur = cv2.GaussianBlur(im2, (blur_amount, blur_amount), 0)

    # Avoid divide-by-zero errors. The cast keeps the in-place add valid for
    # uint8 images under NumPy's casting rules.
    im2_blur += (128 * (im2_blur <= 1.0)).astype(im2_blur.dtype)

    return (im2.astype(numpy.float64) * im1_blur.astype(numpy.float64) /
            im2_blur.astype(numpy.float64))


im1, landmarks1 = read_im_and_landmarks(sys.argv[1])
im2, landmarks2 = read_im_and_landmarks(sys.argv[2])

M = transformation_from_points(landmarks1[ALIGN_POINTS],
                               landmarks2[ALIGN_POINTS])

mask = get_face_mask(im2, landmarks2)
warped_mask = warp_im(mask, M, im1.shape)
combined_mask = numpy.max([get_face_mask(im1, landmarks1), warped_mask],
                          axis=0)

warped_im2 = warp_im(im2, M, im1.shape)
warped_corrected_im2 = correct_colours(im1, warped_im2, landmarks1)

output_im = im1 * (1.0 - combined_mask) + warped_corrected_im2 * combined_mask

# Clip to the valid pixel range and convert back to 8 bits before saving.
cv2.imwrite('output.jpg', numpy.clip(output_im, 0, 255).astype(numpy.uint8))
```
