Eye tracking is one of the technologies that make robots more human.

Eye tracking mainly studies the acquisition, modeling and simulation of eye movement information. There are three main approaches. The first tracks changes in the features of the eyeball and the area around it. The second tracks changes in the iris angle. The third actively projects a beam, such as infrared light, onto the iris and extracts features from it.

As early as 2012, the eye tracking technology company Tobii announced the development of Gaze, an eye-guided interface for Windows 8. Tobii is a Swedish company and an early developer of eye tracking technology. In 2007 the company was valued at 14 million US dollars, and by 2009 its valuation had reached 26.8 million US dollars. In 2012, Intel invested $21 million in Tobii, an investment that was also tied to the Windows 8 operating system. In 2014, Tobii launched its second generation of smart glasses, Glass 2, which uses 2 cameras per lens and corresponding software to capture eye movements accurately.

Tobii smart glasses

Although Intel invested in the eye tracking company very early, eye tracking has still not become a core interaction method for laptops many years later. The development of VR, however, has revealed more possible applications for eye tracking technology.

Eye tracking solves three major problems of VR/AR

Oculus founder Palmer Luckey has said that eye tracking technology will become an "important part" of VR technology in the future. Not only can it enable gaze point rendering, it can also be used to create visual depth and build a better user interface.

(1) Reduce vertigo

For VR to be immersive, it must let people move naturally in the virtual space, which means the scene has to change in response to motion tracking. Current VR devices mainly use spatial positioning technology to capture the position and motion of the body, and inertial sensors to capture the movement of the head. When the person turns the head, the angle of view changes accordingly.

But this is a relatively primitive form of motion capture. Most of the time, people change what they are looking at by turning their eyes rather than their head.

Current VR tracks only the head, not eye movements, and this easily causes dizziness (motion sickness). Humans are extremely sensitive to the relationship between head rotation and the corresponding change in the field of view. If the user's head rotates and the field of view lags behind, even a small delay is noticeable. How small? Studies have shown that the delay between head movement and the field of view cannot exceed 20 ms, otherwise it becomes very obvious. Eye tracking is used here to address this problem by letting changes in the eyeball drive changes in the scene.
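To make the 20 ms figure concrete, here is a minimal sketch of a latency budget check. The stage names and millisecond values are hypothetical, chosen only for illustration; they are not measurements from any real headset.

```python
# Threshold quoted in the text: delay between head movement and the
# updated field of view should not exceed 20 ms.
LATENCY_BUDGET_MS = 20.0


def motion_to_photon_ms(stages):
    """Sum the per-stage delays between a movement and the updated image."""
    return sum(stages.values())


def within_budget(stages, budget_ms=LATENCY_BUDGET_MS):
    """Return True if the total delay fits inside the budget."""
    return motion_to_photon_ms(stages) <= budget_ms


# Hypothetical pipeline stages (illustrative values, not measured data).
example_stages = {
    "sensor_sampling": 2.0,
    "tracking_fusion": 3.0,
    "rendering": 11.0,
    "display_scanout": 6.0,
}

if __name__ == "__main__":
    total = motion_to_photon_ms(example_stages)
    print(f"total delay: {total} ms, within budget: {within_budget(example_stages)}")
```

With these assumed numbers the pipeline adds up to 22 ms and misses the budget, which shows how little room each stage has: shaving a few milliseconds off rendering alone would be enough to bring it back under the threshold.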

(2) Gaze point rendering

Changes to the scene are necessary, but the amount of information and computation required for VR to present the same scenes and sense of space as the natural world is enormous. Most current VR systems render the entire scene with equal sharpness. When the user moves the head and body, the virtual objects change position accordingly, and moving closer or farther away affects the depth of field. These changes require a great deal of computation, and the scene must be updated continuously.

Current VR can achieve 36 refreshes per second, while keeping up with the speed at which the human eye shifts between scenes would require 2000-3000 updates per second. Even high-end computers cannot render these scenes in real time.

In fact, VR neither needs to nor should present every object in the scene with full clarity. In daily life, we all understand that near objects look big and far objects look small, and when we look at an object, it becomes sharp while other objects become blurred, which gives us a sense of space and depth of field. If our gaze shifts and every object in the picture stays sharp, our eyes feel that something is "off" and tire from taking in too much information.

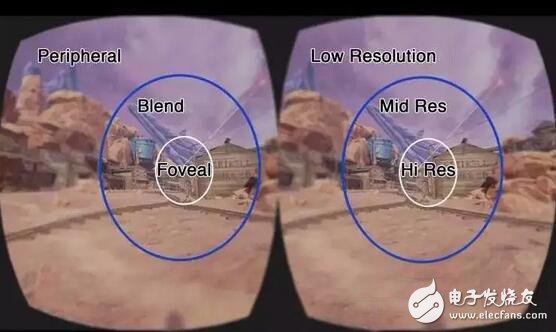

Gaze point rendering effect

Gaze point rendering can solve the problem of picture presentation in VR. By tracking the gaze point of the eye, the computer needs to render sharply only the part of the scene at the gaze point and can blur the surroundings. In this way, what our eyes see in the virtual environment matches the experience of looking at objects in a natural scene, so switching between the virtual and the real feels seamless, and the dizziness caused by changes in viewpoint and movement is alleviated.
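The idea of rendering sharply only around the gaze point can be sketched as a function that maps the angular distance from the gaze point to a render-quality scale. The thresholds (`inner_deg`, `outer_deg`), the minimum scale and the constant `pixels_per_degree` conversion are all assumptions made for this illustration, not values taken from any shipping headset or renderer.

```python
import math


def eccentricity_deg(pixel, gaze, pixels_per_degree):
    """Approximate angular distance (degrees) between a pixel and the gaze point.

    Assumes a constant pixels-per-degree ratio, which is a simplification.
    """
    dx = pixel[0] - gaze[0]
    dy = pixel[1] - gaze[1]
    return math.hypot(dx, dy) / pixels_per_degree


def render_scale(ecc_deg, inner_deg=5.0, outer_deg=20.0, min_scale=0.25):
    """Return a quality scale: 1.0 at the gaze point, min_scale in the periphery.

    Between inner_deg and outer_deg the scale falls off linearly.
    """
    if ecc_deg <= inner_deg:
        return 1.0
    if ecc_deg >= outer_deg:
        return min_scale
    t = (ecc_deg - inner_deg) / (outer_deg - inner_deg)
    return 1.0 + t * (min_scale - 1.0)


if __name__ == "__main__":
    gaze = (960, 540)  # hypothetical gaze point in screen pixels
    for pixel in [(960, 540), (1100, 540), (1800, 1000)]:
        ecc = eccentricity_deg(pixel, gaze, pixels_per_degree=10.0)
        print(pixel, round(ecc, 1), round(render_scale(ecc), 3))
```

A renderer could use such a scale to pick a lower resolution or a stronger blur for regions far from the gaze point, which is where the computational savings described above would come from.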

When talking about VR trends, Oculus chief scientist Michael Abrash said that VR will solve the depth-of-field focus problem within 5 years, and that gaze point rendering and eye tracking are the key solutions. As mentioned in the previous article, NVIDIA and Qixin Yiwei will cooperate to implement GPU-based, eye-tracking-driven gaze point rendering at the level of the underlying technology.

(3) Eye-triggered interactive interface

Interaction in VR is still an area that needs to be explored. Users are accustomed to information presented on 2D flat interfaces, and they tend to feel lost when they enter VR. Many experiments have found that users do not take in the whole environment by turning their heads, and they have difficulty finding hidden menus and options on their own, usually requiring guidance from staff or from in-game voice prompts.

Eye control will bring new gameplay to VR. In a shooting game, the cursor can be locked onto a target by the eye, and when the user closes one eye, the scope is brought up automatically. Gazing at different parts of the game scene can also trigger different events: when the player looks at an intersection, map navigation appears automatically, and looking at different parts of an NPC triggers different plots. In addition, eye control can be applied to game menus. Menus that are not needed are hidden automatically and appear only when triggered by the eye, which keeps the VR interface cleaner.
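One common way to turn gaze into a trigger is to fire an action once the eye has rested on the same target for a short time. The following is a minimal sketch of that dwell-time idea; the class name `DwellSelector`, the target labels and the 0.5 second threshold are hypothetical and do not correspond to any particular eye tracking SDK.

```python
class DwellSelector:
    """Fire once when the gaze stays on the same target for dwell_s seconds."""

    def __init__(self, dwell_s=0.5):
        self.dwell_s = dwell_s
        self._target = None   # target currently being looked at
        self._since = None    # time the gaze arrived on that target
        self._fired = False   # whether this dwell has already triggered

    def update(self, target, now_s):
        """Feed the gazed-at target (or None) and a timestamp in seconds.

        Returns the target when the dwell threshold is reached, else None.
        """
        if target != self._target:
            # Gaze moved to a new target: restart the dwell timer.
            self._target = target
            self._since = now_s
            self._fired = False
            return None
        if target is None or self._fired:
            return None
        if now_s - self._since >= self.dwell_s:
            self._fired = True
            return target
        return None


if __name__ == "__main__":
    selector = DwellSelector(dwell_s=0.5)
    samples = [("menu", 0.0), ("menu", 0.3), ("menu", 0.6), ("npc", 1.0)]
    for target, t in samples:
        print(t, target, selector.update(target, t))
```

Firing only once per dwell prevents a menu from reopening on every frame while the user keeps looking at it, and resetting the timer on every change of target avoids accidental triggers when the eye merely sweeps across an object.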

Of course, the right way to interact in virtual reality remains to be explored. Whether it is the gesture interaction advocated by Leap Motion or interaction based on eye control, it must conform to people's interaction habits.

What is the next step in eye tracking?

The major players have acquired and invested in eye tracking technology companies, and eye tracking plays an important role in solving the problems of vertigo, immersion and interaction. Eye tracking has become a key and basic technology for the development of AR/VR.
