Recently, while finishing a project, I needed a speech recognition feature. Its main purpose is to convert what the user says into text, which is then processed further. I found several third-party speech recognition SDKs, such as Baidu speech recognition, WeChat speech recognition, and Keda Xunfei (iFlytek) speech recognition. Of these, I found Keda Xunfei the easiest to use. I wrote a demo program with detailed comments and have organized my notes here.

(1) Preparation work

0. Create an empty Android project, for example with the project name SpeechRecognitionDemoJYJ.

1. First, register on the Keda Xunfei Open Platform (http://), or log in with a third-party account such as QQ.

2. Click "Console" in the upper right corner of the website's homepage to enter the console.

3. Create an application by following the instructions, and name it SpeechRecognitionDemoJYJ. Once it is created successfully, you will get an AppID, which is needed later in the code.

4. Click the "Open Service" button next to SpeechRecognitionDemoJYJ, enable the "Voice dictation" service, then open the voice dictation page and download the SDK corresponding to the current application.

5. Download the Android version of the SDK. Copy Msc.jar and the armeabi folder from the libs directory of the SDK package into the libs directory of the Android project (if the project has no libs directory, create it yourself). Because the demo also uses the voice dictation Dialog, copy the iflytek folder from the assets directory of the SDK package into the assets directory of the project, as shown in the following figure. Also note that each application must apply for its own AppID and download the SDK that corresponds to that AppID; otherwise an error will occur.

6. For more detailed explanations and materials, refer to the documentation library of the Xunfei Open Platform (http://).

(2) Development

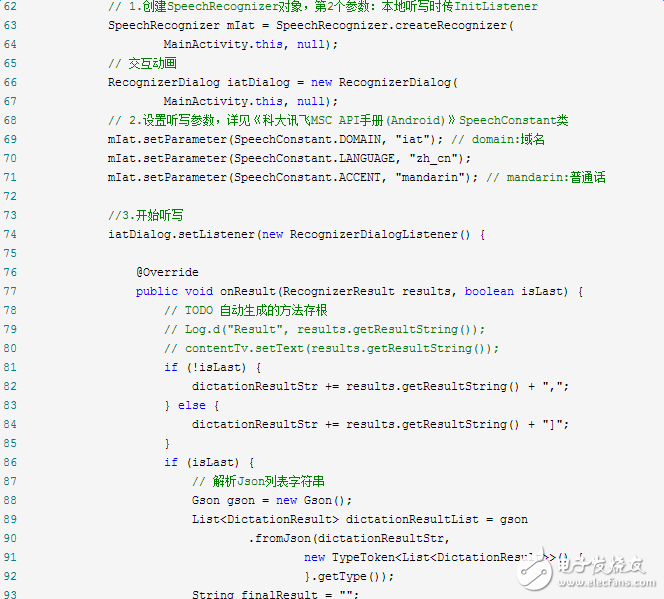

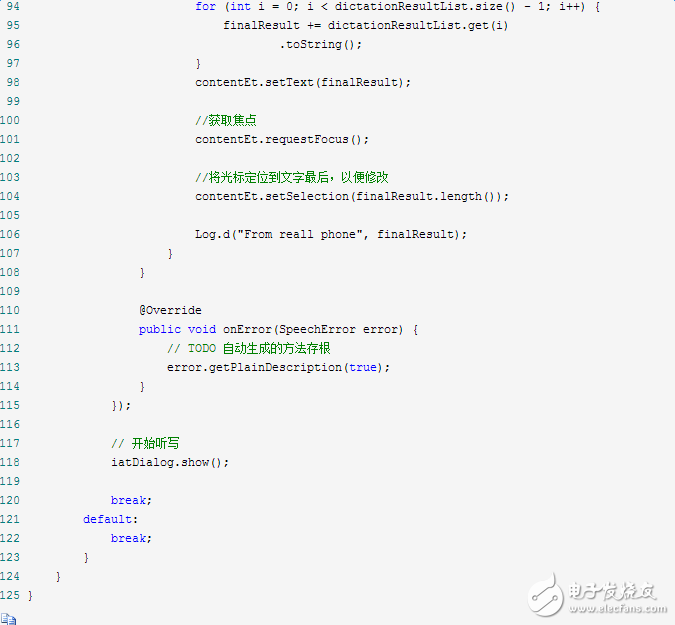

The demo does something very simple: click a button to pop up the speech recognition Dialog, speak, and after tapping the Dialog window, the automatically recognized text is displayed in the EditText below. The server returns the voice dictation result as JSON data, which is then parsed (see my other article: parsing JSON format data with GSON) to obtain the recognized string.
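To make the parsing step more concrete, here is a minimal sketch in plain Java. It is not the code used in the demo (which relies on GSON), and the article does not show the returned payload, so the JSON shape is an assumption: a `ws` array whose entries each hold a `cw` array of candidate objects, with the word stored under `w`. The class `DictationResultParser` and its method `extractText` are hypothetical names, not part of the Xunfei SDK.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper for illustration; not part of the Xunfei SDK. */
final class DictationResultParser {

    // ASSUMPTION: each word entry looks like "cw":[{"sc":0,"w":"text"}, ...].
    // The pattern captures the "w" value of the first candidate in every "cw" array.
    private static final Pattern FIRST_WORD = Pattern.compile(
            "\"cw\"\\s*:\\s*\\[\\s*\\{[^}]*?\"w\"\\s*:\\s*\"((?:[^\"\\\\]|\\\\.)*)\"");

    /** Concatenates the first candidate word of every "cw" array, in order. */
    static String extractText(String json) {
        StringBuilder text = new StringBuilder();
        Matcher matcher = FIRST_WORD.matcher(json);
        while (matcher.find()) {
            // JSON escape sequences are left as-is in this sketch.
            text.append(matcher.group(1));
        }
        return text.toString();
    }

    public static void main(String[] args) {
        // Hand-written sample in the assumed shape, not real server output.
        String sample = "{\"sn\":1,\"ls\":true,\"ws\":["
                + "{\"bg\":0,\"cw\":[{\"sc\":0,\"w\":\"hello\"}]},"
                + "{\"bg\":0,\"cw\":[{\"sc\":0,\"w\":\" world\"}]}]}";
        System.out.println(extractText(sample)); // prints "hello world"
    }
}
```

A regular expression keeps the sketch free of dependencies, but it does not decode escape sequences or validate the JSON. In a real project a proper JSON library such as GSON is the safer choice.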

1. XML code:

The interface contains a Button, a TextView, and an EditText; the EditText is used to display the speech recognition result.

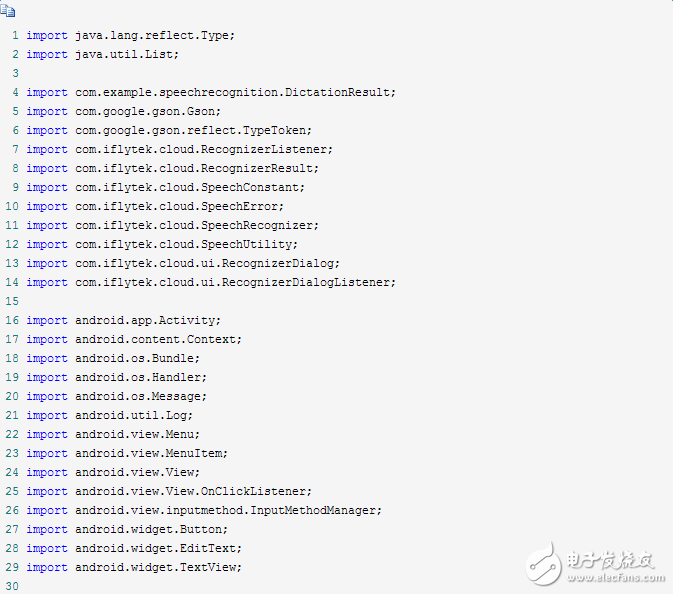

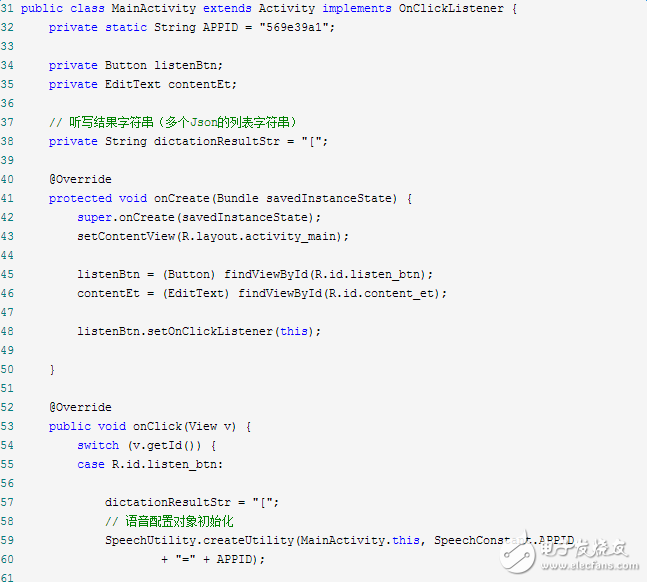

2. MainActivity
