Part One: Building ELK

ELK is short for Elasticsearch, Logstash, and Kibana. These three are the core components of the stack, but not all of it.

Elasticsearch is a real-time full-text search and analytics engine that provides three functions: collecting, analyzing, and storing data. It exposes open REST and Java APIs, offers efficient search, and scales out as a distributed system. It is built on top of the Apache Lucene search engine library.

Logstash is a tool for collecting, parsing, and filtering logs. It supports almost any type of log, including system logs, error logs, and custom application logs. It can receive logs from many sources, including syslog, messaging systems (such as RabbitMQ), and JMX, and it can output data in a variety of ways, including e-mail, websockets, and Elasticsearch.

Kibana is a web-based graphical interface for searching, analyzing, and visualizing log data stored in Elasticsearch indices. It uses Elasticsearch's REST interface to retrieve data, so users can both build custom dashboard views of their own data and query and filter that data ad hoc.

Environment

Two CentOS 6.5 hosts are used:

192.168.1.202 installs: elasticsearch, logstash, Kibana, Nginx, Http, Redis

192.168.1.201 installs: logstash

Installation

Import the yum repository key for elasticsearch (this must be done on all servers):

    # rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

Configure the yum repository for elasticsearch:

    # vim /etc/yum.repos.d/elasticsearch.repo

Add the following to the elasticsearch.repo file:

    [elasticsearch-5.x]
    name=Elasticsearch repository for 5.x packages
    baseurl=https://artifacts.elastic.co/packages/5.x/yum
    gpgcheck=1
    gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
    enabled=1
    autorefresh=1
    type=rpm-md

Install elasticsearch environment

Install elasticsearch:

    # yum install -y elasticsearch

Install the Java environment (Java must be version 1.8 or later):

    # wget http://download.oracle.com/otn-pub/java/jdk/8u131-b11/d54c1d3a095b4ff2b6607d096fa80163/jdk-8u131-linux-x64.rpm
    # rpm -ivh jdk-8u131-linux-x64.rpm

Verify that Java is installed successfully:

    # java -version
    java version "1.8.0_131"
    Java(TM) SE Runtime Environment (build 1.8.0_131-b11)
    Java HotSpot(TM) 64-Bit Server VM (build 25.131-b11, mixed mode)

Create the Elasticsearch data directory and change its owner and group

Create the directory (a custom location for storing the data) and hand it to the elasticsearch user:

    # mkdir -p /data/es-data
    # chown -R elasticsearch:elasticsearch /data/es-data

Change the owner and group of the Elasticsearch log directory

    # chown -R elasticsearch:elasticsearch /var/log/elasticsearch/

Modify the elasticsearch configuration file

    # vim /etc/elasticsearch/elasticsearch.yml

Find cluster.name in the configuration file, uncomment it, and set the cluster name:

    cluster.name: demon

Find node.name, uncomment it, and set the node name:

    node.name: elk-1

Set the data path:

    path.data: /data/es-data

Set the log path:

    path.logs: /var/log/elasticsearch/

Lock the process memory so that Elasticsearch is not swapped out to the swap partition:

    bootstrap.memory_lock: true

Set the network address to listen on:

    network.host: 0.0.0.0

Set the listening port:

    http.port: 9200

Add new parameters so that the head plugin can access es (on 5.x, add them manually if they are not present):

    http.cors.enabled: true
    http.cors.allow-origin: "*"

Start the service

    # /etc/init.d/elasticsearch start
    Starting elasticsearch: Java HotSpot(TM) 64-Bit Server VM warning: INFO: os::commit_memory(0x0000000085330000, 2060255232, 0) failed; error='Cannot allocate memory' (errno=12)
    #
    # There is insufficient memory for the Java Runtime Environment to continue.
    # Native memory allocation (mmap) failed to map 2060255232 bytes for committing reserved memory.
    # An error report file with more information is saved as:
    # /tmp/hs_err_pid2616.log
    [FAILED]

This error occurs because the default heap size is 2G and the virtual machine does not have that much memory. Lower the values:

    # vim /etc/elasticsearch/jvm.options
    -Xms512m
    -Xmx512m

Start the service again:

    # /etc/init.d/elasticsearch start

Check the service status. If there is an error, look at the error log (the log file is named after the cluster name):

    # less /var/log/elasticsearch/demon.log

Enable the service at boot:

    # chkconfig elasticsearch on
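The heap change above can also be applied programmatically. The following is a minimal Python sketch, assuming the file contains plain `-Xms...` and `-Xmx...` lines as in the stock 5.x jvm.options; the function name `set_heap_size` and the sample text are hypothetical and only illustrate the edit.

```python
import re


def set_heap_size(jvm_options: str, size: str) -> str:
    """Return jvm.options text with -Xms and -Xmx both set to `size`."""
    out = []
    for line in jvm_options.splitlines():
        stripped = line.strip()
        # Only active heap flags are rewritten; comments and other flags stay.
        if re.match(r"^-Xm[sx]\S+$", stripped):
            flag = stripped[:4]  # "-Xms" or "-Xmx"
            out.append(f"{flag}{size}")
        else:
            out.append(line)
    return "\n".join(out) + "\n"


# Hypothetical excerpt of /etc/elasticsearch/jvm.options
sample = "# heap settings\n-Xms2g\n-Xmx2g\n-XX:+UseConcMarkSweepGC\n"
print(set_heap_size(sample, "512m"))
```

Keeping both values equal mirrors the manual edit, where -Xms and -Xmx are both set to 512m.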

Precautions

A few parameters need to be changed, otherwise startup fails with an error.

    # vim /etc/security/limits.conf

Append the following at the end of the file (elk is the user that starts the service; * can be used instead):

    elk soft nofile 65536
    elk hard nofile 65536
    elk soft nproc 2048
    elk hard nproc 2048
    elk soft memlock unlimited
    elk hard memlock unlimited

Then change one more parameter:

    # vim /etc/security/limits.d/90-nproc.conf

Change 1024 to 2048 (ES requires at least 2048):

    * soft nproc 2048

There is one more problem to watch for. If the following appears in the log, startup also fails (this problem took a long time to track down):

    [2017-06-14T19:19:01,641][INFO ][o.e.b.BootstrapChecks ] [elk-1] bound or publishing to a non-loopback or non-link-local address, enforcing bootstrap checks
    [2017-06-14T19:19:01,658][ERROR][o.e.b.Bootstrap ] [elk-1] node validation exception
    [1] bootstrap checks failed
    [1]: system call filters failed to install; check the logs and fix your configuration or disable system call filters at your own risk

Add the following parameter to the configuration file (I do not fully understand its effect; as the error message suggests, it disables the system call filters that failed to install):

    # vim /etc/elasticsearch/elasticsearch.yml
    bootstrap.system_call_filter: false
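To confirm that the new limits are actually in effect for a login session, the soft limits can be read from a process started by the service user. This is a small Python sketch, assuming a Linux host; the minimums are the nofile and nproc values from limits.conf above, and the names `REQUIRED`, `find_violations`, and `current_soft_limits` are hypothetical.

```python
import resource

# Minimums taken from the limits.conf values above.
REQUIRED = {"nofile": 65536, "nproc": 2048}


def find_violations(current: dict, required: dict = REQUIRED) -> list:
    """Return the names of limits whose soft value is below the minimum."""
    bad = []
    for name, minimum in required.items():
        soft = current.get(name)
        # RLIM_INFINITY means "unlimited", which always satisfies the minimum.
        if soft is None or (soft != resource.RLIM_INFINITY and soft < minimum):
            bad.append(name)
    return bad


def current_soft_limits() -> dict:
    """Read the soft limits of the current process."""
    return {
        "nofile": resource.getrlimit(resource.RLIMIT_NOFILE)[0],
        "nproc": resource.getrlimit(resource.RLIMIT_NPROC)[0],
    }


# An empty list means both limits meet the minimums.
print(find_violations(current_soft_limits()))
```

Run it as the user that starts Elasticsearch, since limits.conf entries apply per user.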

Request port 9200 to check whether startup succeeded

First check whether port 9200 is up:

    # netstat -antp | grep 9200
    tcp 0 0 :::9200 :::* LISTEN 2934/java

Then test access (the following response is normal):

    # curl http://127.0.0.1:9200/
    {
      "name" : "linux-node1",
      "cluster_name" : "demon",
      "cluster_uuid" : "kM0GMFrsQ8K_cl5Fn7BF-g",
      "version" : {
        "number" : "5.4.0",
        "build_hash" : "780f8c4",
        "build_date" : "2017-04-28T17:43:27.229Z",
        "build_snapshot" : false,
        "lucene_version" : "6.5.0"
      },
      "tagline" : "You Know, for Search"
    }
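The same check can be scripted. The sketch below, in Python, parses a response body like the one above and pulls out the fields worth verifying; the function name `summarize_root_response` is hypothetical, and the sample body is copied from the curl output rather than fetched from a live server.

```python
import json

# Response body copied from the curl output above.
SAMPLE_BODY = """
{
  "name" : "linux-node1",
  "cluster_name" : "demon",
  "cluster_uuid" : "kM0GMFrsQ8K_cl5Fn7BF-g",
  "version" : {
    "number" : "5.4.0",
    "build_hash" : "780f8c4",
    "build_date" : "2017-04-28T17:43:27.229Z",
    "build_snapshot" : false,
    "lucene_version" : "6.5.0"
  },
  "tagline" : "You Know, for Search"
}
"""


def summarize_root_response(body: str) -> dict:
    """Extract node name, cluster name, and version from the root endpoint."""
    info = json.loads(body)
    number = info["version"]["number"]
    major = int(number.split(".")[0])
    return {
        "node": info["name"],
        "cluster": info["cluster_name"],
        "version": number,
        "is_5x": major == 5,  # this guide installs the 5.x packages
    }


print(summarize_root_response(SAMPLE_BODY))
```

Against a running node, the body could be obtained with `urllib.request.urlopen("http://127.0.0.1:9200/").read()` and passed to the same function.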

How to interact with elasticsearch

Java API

RESTful API (JavaScript, .NET, PHP, Perl, Python)

View the status using the API:

    # curl -i -XGET 'localhost:9200/_count?pretty'
    HTTP/1.1 200 OK
    content-type: application/json; charset=UTF-8
    content-length: 95

    {
      "count" : 0,
      "_shards" : {
        "total" : 0,
        "successful" : 0,
        "failed" : 0
      }
    }

Install the plugin

Install the elasticsearch-head plugin, either from its docker image or by downloading the elasticsearch-head project from GitHub. Either option 1 or option 2 works.

1. Use the docker image of elasticsearch-head

    # docker run -p 9100:9100 mobz/elasticsearch-head:5

After the image is downloaded and the container starts, open http://localhost:9100/ in a browser.

2. Install elasticsearch-head with git

    # yum install -y npm
    # git clone git://github.com/mobz/elasticsearch-head.git
    # cd elasticsearch-head
    # npm install
    # npm run start

Check whether the port is up:

    # netstat -antp | grep 9100

Test access from a browser: http://IP:9100/

Using Logstash

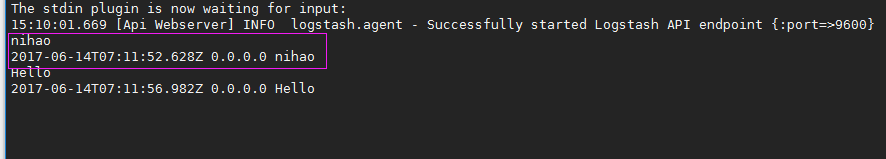

Install the Logstash environment. Official installation manual: https://

Import the yum repository key:

    # rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

Install logstash with yum:

    # yum install -y logstash

List the logstash installation directories:

    # rpm -ql logstash

Create a symbolic link so that the installation path does not have to be typed each time (logstash is installed under /usr/share by default):

    # ln -s /usr/share/logstash/bin/logstash /bin/

Run a logstash command:

    # logstash -e 'input { stdin { } } output { stdout {} }'

After it starts, type: nihao

stdout returns:

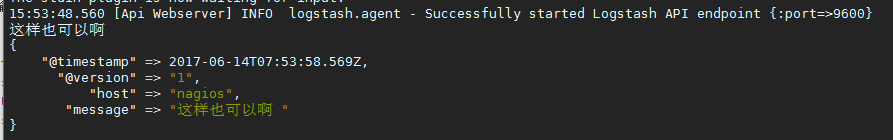

Note:

-e runs the configuration given on the command line

input { stdin { } } is the standard input plugin

output { stdout { } } is the standard output plugin

Use the rubydebug codec to print more detailed information:

    # logstash -e 'input { stdin { } } output { stdout { codec => rubydebug } }'

After it starts, type: nihao

stdout output:

To send events to both standard output and elasticsearch, run:

    # /usr/share/logstash/bin/logstash -e 'input { stdin { } } output { elasticsearch { hosts => ["192.168.1.202:9200"] } stdout { codec => rubydebug } }'

After it starts, type: I am elk

Returned result (standard output):

Using a Logstash configuration file

Official guide: https://

Create the configuration file elk.conf:

    # vim /etc/logstash/conf.d/elk.conf

Add the following to the file:

    input { stdin { } }
    output {
      elasticsearch { hosts => ["192.168.1.202:9200"] }
      stdout { codec => rubydebug }
    }

Run logstash with the configuration file (from the /etc/logstash/conf.d directory):

    # logstash -f ./elk.conf

After it starts, type some input and check the standard output.

Logstash plugin types

1. Input plugins

Definitive guide: https://

Using the file plugin:

    # vim /etc/logstash/conf.d/elk.conf

Add the following configuration:

    input {
      file {
        path => "/var/log/messages"
        type => "system"
        start_position => "beginning"
      }
    }
    output {
      elasticsearch {
        hosts => ["192.168.1.202:9200"]
        index => "system-%{+YYYY.MM.dd}"
      }
    }

Run logstash with the elk.conf configuration file:

    # logstash -f /etc/logstash/conf.d/elk.conf
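The `%{+YYYY.MM.dd}` part of the index name is filled in from each event's @timestamp, which Logstash keeps in UTC, so one index is created per UTC day. A minimal Python sketch of that naming rule follows; the function name `daily_index` and the sample times are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def daily_index(prefix: str, event_time: datetime) -> str:
    """Build an index name the way index => "prefix-%{+YYYY.MM.dd}" does."""
    # The date is taken from the event time converted to UTC.
    utc = event_time.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


# An event logged in UTC.
print(daily_index("system", datetime(2017, 6, 14, 19, 19, 1, tzinfo=timezone.utc)))

# An event logged at 07:00 on June 15 in UTC+8 still lands in the June 14 index.
cst = timezone(timedelta(hours=8))
print(daily_index("system", datetime(2017, 6, 15, 7, 0, 0, tzinfo=cst)))
```

This is why logs written shortly after local midnight on a UTC+8 host can show up in the previous day's index.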

To also collect the secure log and store each log in its own index according to its type, continue editing the elk.conf file

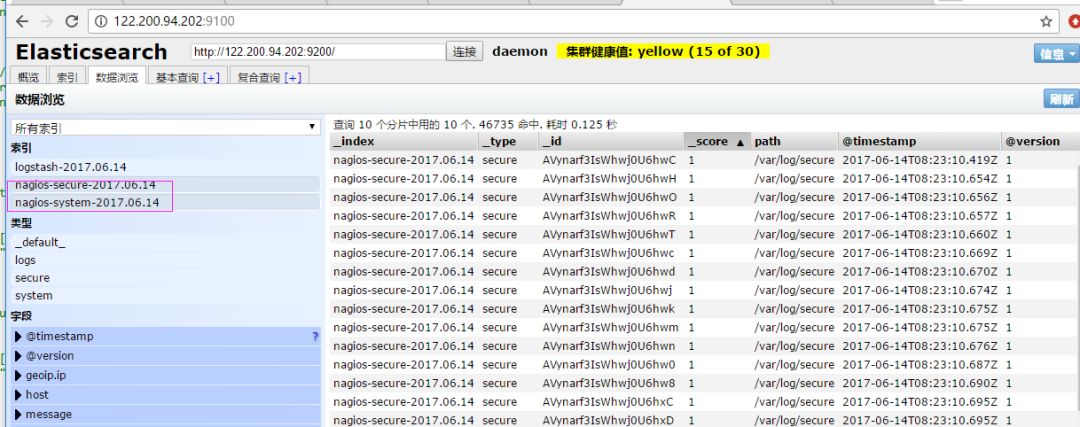

    # vim /etc/logstash/conf.d/elk.conf

Add the secure log path:

    input {
      file { path => "/var/log/messages" type => "system" start_position => "beginning" }
      file { path => "/var/log/secure" type => "secure" start_position => "beginning" }
    }
    output {
      if [type] == "system" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-system-%{+YYYY.MM.dd}" }
      }
      if [type] == "secure" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-secure-%{+YYYY.MM.dd}" }
      }
    }

Run logstash with the elk.conf configuration file:

    # logstash -f ./elk.conf

Once these settings work, install Kibana next so that the data can be displayed in a web front end.

Kibana installation and use

Install the Kibana environment. Official installation manual: https://

Download the kibana tar.gz package:

    # wget https://artifacts.elastic.co/downloads/kibana/kibana-5.4.0-linux-x86_64.tar.gz

Extract the tarball:

    # tar -xzf kibana-5.4.0-linux-x86_64.tar.gz

Move the extracted directory:

    # mv kibana-5.4.0-linux-x86_64 /usr/local

Create a symbolic link for kibana:

    # ln -s /usr/local/kibana-5.4.0-linux-x86_64/ /usr/local/kibana

Edit the kibana configuration file:

    # vim /usr/local/kibana/config/kibana.yml

Enable the following settings:

    server.port: 5601
    server.host: "0.0.0.0"
    elasticsearch.url: "http://192.168.1.202:9200"
    kibana.index: ".kibana"

Install screen so that kibana can keep running in the background (screen is optional; kibana can be put in the background in other ways):

    # yum -y install screen
    # screen
    # /usr/local/kibana/bin/kibana

Check the port:

    # netstat -antp | grep 5601
    tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 17007/node

Open a browser and set the corresponding index: http://IP:5601

Part Two: ELK in Practice

Now that indexes can be created, the nginx, apache, messages, and secure logs can be shipped to the front end (if Nginx is already installed, just modify its configuration; if not, install it yourself)

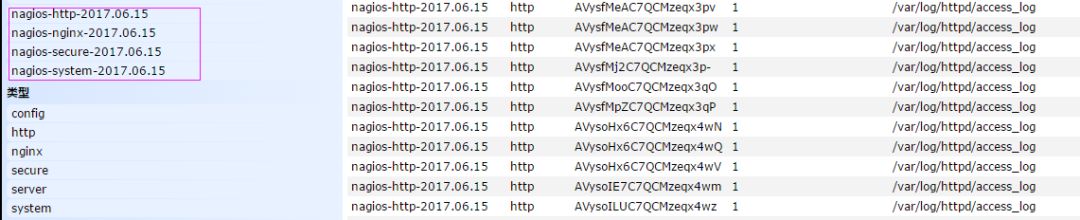

Edit the nginx configuration file and add the following inside the http block:

    log_format json '{"@timestamp":"$time_iso8601",'
                    '"@version":"1",'
                    '"client":"$remote_addr",'
                    '"url":"$uri",'
                    '"status":"$status",'
                    '"domian":"$host",'
                    '"host":"$server_addr",'
                    '"size":"$body_bytes_sent",'
                    '"responsetime":"$request_time",'
                    '"referer":"$http_referer",'
                    '"ua":"$http_user_agent"'
                    '}';

Change the access_log output format to the json format just defined:

    access_log logs/elk.access.log json;

Next, modify the apache configuration file:

    LogFormat "{ \
        \"@timestamp\": \"%{%Y-%m-%dT%H:%M:%S%z}t\", \
        \"@version\": \"1\", \
        \"tags\":[\"apache\"], \
        \"message\": \"%h %l %u %t \\\"%r\\\" %>s %b\", \
        \"clientip\": \"%a\", \
        \"duration\": %D, \
        \"status\": %>s, \
        \"request\": \"%U%q\", \
        \"urlpath\": \"%U\", \
        \"urlquery\": \"%q\", \
        \"bytes\": %B, \
        \"method\": \"%m\", \
        \"site\": \"%{Host}i\", \
        \"referer\": \"%{Referer}i\", \
        \"useragent\": \"%{User-agent}i\" \
    }" ls_apache_json

In the same way, change the output format to the json format defined above:

    CustomLog logs/access_log ls_apache_json

Edit the logstash configuration file to collect the logs:

    # vim /etc/logstash/conf.d/full.conf

    input {
      file { path => "/var/log/messages" type => "system" start_position => "beginning" }
      file { path => "/var/log/secure" type => "secure" start_position => "beginning" }
      file { path => "/var/log/httpd/access_log" type => "http" start_position => "beginning" }
      file { path => "/usr/local/nginx/logs/elk.access.log" type => "nginx" start_position => "beginning" }
    }
    output {
      if [type] == "system" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-system-%{+YYYY.MM.dd}" }
      }
      if [type] == "secure" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-secure-%{+YYYY.MM.dd}" }
      }
      if [type] == "http" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-http-%{+YYYY.MM.dd}" }
      }
      if [type] == "nginx" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-nginx-%{+YYYY.MM.dd}" }
      }
    }

Run it to see how it works:

    # logstash -f /etc/logstash/conf.d/full.conf
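To get a feel for what one line of elk.access.log looks like under the nginx json log_format, the following Python sketch parses a made-up sample line. The field names come from the log_format above; the sample values and the function name `parse_access_line` are hypothetical. Because log_format wraps every value in quotes, numeric fields arrive as strings and are converted here.

```python
import json

# Hypothetical line in the shape produced by the json log_format above.
SAMPLE_LINE = (
    '{"@timestamp":"2017-06-14T19:19:01+08:00","@version":"1",'
    '"client":"192.168.1.10","url":"/index.html","status":"200",'
    '"domian":"example.com","host":"192.168.1.202","size":"612",'
    '"responsetime":"0.003","referer":"-","ua":"curl/7.19.7"}'
)


def parse_access_line(line: str) -> dict:
    """Parse one JSON access-log line and convert the numeric fields."""
    event = json.loads(line)
    event["status"] = int(event["status"])
    event["size"] = int(event["size"])
    event["responsetime"] = float(event["responsetime"])
    return event


event = parse_access_line(SAMPLE_LINE)
print(event["client"], event["status"], event["size"], event["responsetime"])
```

Converting status, size, and responsetime to numbers is what makes range queries and averages meaningful once the fields are indexed.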

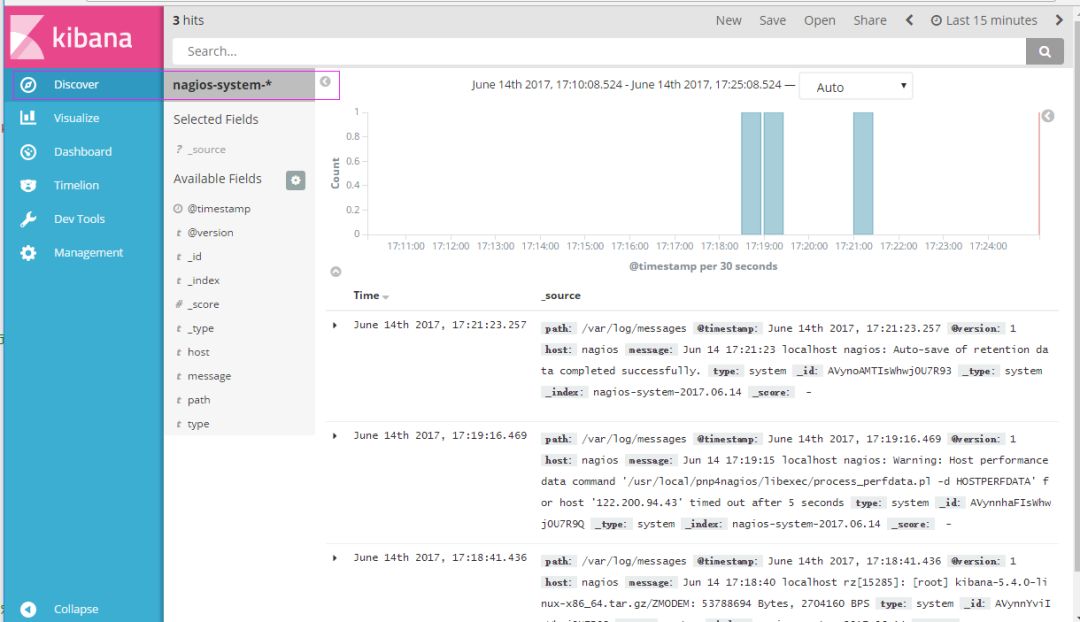

All the indexes for these logs now exist. Next, go to Kibana and create an index for each log so that it can be displayed (create the indexes the same way as above), then look at the result

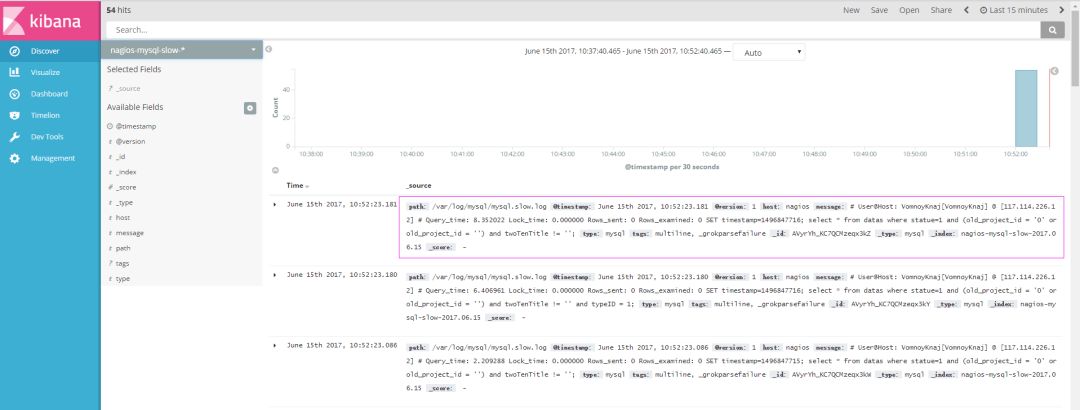

Next, display the MySQL slow query log

Because the format of the MySQL slow query log is unusual, it has to be matched with regular expressions, and the multiline codec is needed to join multi-line entries (see the configuration):

    input {
      file { path => "/var/log/messages" type => "system" start_position => "beginning" }
      file { path => "/var/log/secure" type => "secure" start_position => "beginning" }
      file { path => "/var/log/httpd/access_log" type => "http" start_position => "beginning" }
      file { path => "/usr/local/nginx/logs/elk.access.log" type => "nginx" start_position => "beginning" }
      file {
        path => "/var/log/mysql/mysql.slow.log"
        type => "mysql"
        start_position => "beginning"
        codec => multiline {
          pattern => "^# User@Host:"
          negate => true
          what => "previous"
        }
      }
    }
    filter {
      grok {
        match => { "message" => "SELECT SLEEP" }
        add_tag => [ "sleep_drop" ]
        tag_on_failure => []
      }
      if "sleep_drop" in [tags] {
        drop {}
      }
      grok {
        match => { "message" => "(?m)^# User@Host: %{USER:User}\[[^\]]+\] @ (?: (?

Check the result: each slow query is displayed as one entry. Without the multiline match, every line of the log would be displayed as a separate entry.
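The grouping rule of the multiline codec (`pattern => "^# User@Host:"`, `negate => true`, `what => "previous"`) can be sketched in a few lines of Python: any line that does not match the pattern is appended to the previous event, so each `# User@Host:` line starts a new one. This is only an illustration of the rule, not the codec's implementation; the function name `group_multiline` and the sample log lines are hypothetical.

```python
import re

# Hypothetical excerpt of a MySQL slow query log.
SLOW_LOG = [
    "# User@Host: root[root] @ localhost []",
    "# Query_time: 3.000215  Lock_time: 0.000000 Rows_sent: 1  Rows_examined: 0",
    "SET timestamp=1497438000;",
    "select sleep(3);",
    "# User@Host: app[app] @  [192.168.1.201]",
    "# Query_time: 2.500000  Lock_time: 0.000100 Rows_sent: 10  Rows_examined: 50000",
    "SET timestamp=1497438060;",
    "select * from orders where status = 'open';",
]


def group_multiline(lines, pattern=r"^# User@Host:"):
    """Join lines into events: non-matching lines attach to the previous event."""
    start = re.compile(pattern)
    events = []
    for line in lines:
        if start.search(line) or not events:
            events.append(line)  # a matching line begins a new event
        else:
            events[-1] += "\n" + line  # negate + previous: append to last event
    return events


events = group_multiline(SLOW_LOG)
print(len(events))
print(events[0])
```

With the eight sample lines, the result is two events of four lines each, which matches the one-entry-per-slow-query behaviour described above.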

Specific log output requirements need to be analyzed case by case

Part Three: The Final ELK Setup

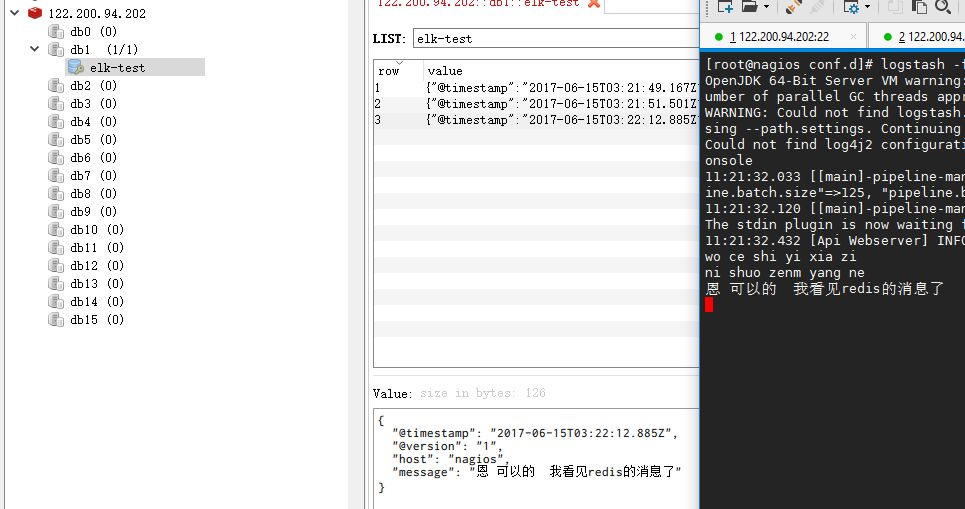

Install redis:

    # yum install -y redis

Modify the redis configuration file:

    # vim /etc/redis.conf

Change the following settings:

    daemonize yes
    bind 192.168.1.202

Start the redis service:

    # /etc/init.d/redis restart

Test whether redis started successfully:

    # redis-cli -h 192.168.1.202

Type info. If there is no error, redis is working:

    redis 192.168.1.202:6379> info
    redis_version:2.4.10
    ....

Edit the redis-out.conf configuration file to store data from standard input in redis:

    # vim /etc/logstash/conf.d/redis-out.conf

Add the following:

    input { stdin {} }
    output {
      redis {
        host => "192.168.1.202"
        port => "6379"
        password => 'test'
        db => '1'
        data_type => "list"
        key => 'elk-test'
      }
    }

Run logstash with the redis-out.conf configuration file:

    # /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis-out.conf

After it starts, type some content into logstash and check the result

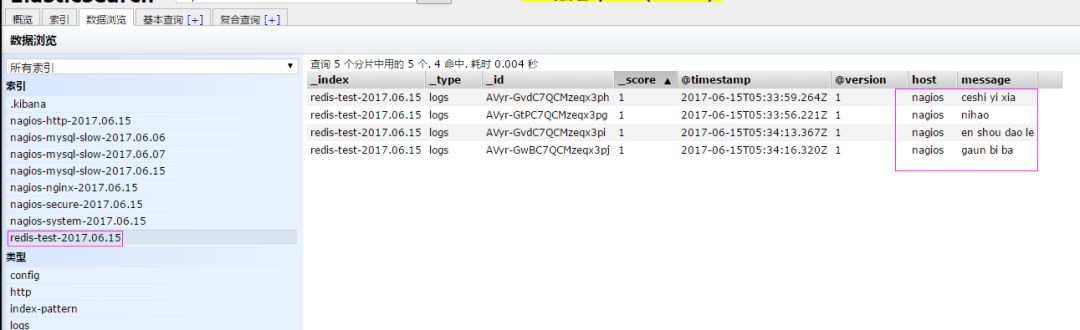

Edit the redis-in.conf configuration file to export the data stored in redis to elasticsearch:

    # vim /etc/logstash/conf.d/redis-in.conf

Add the following:

    input {
      redis {
        host => "192.168.1.202"
        port => "6379"
        password => 'test'
        db => '1'
        data_type => "list"
        key => 'elk-test'
        # batch_count is the number of items read from the queue at a time.
        # The default is 125 (if redis holds fewer than 125 items an error
        # occurs, so set this value during testing).
        batch_count => 1
      }
    }
    output {
      elasticsearch {
        hosts => ['192.168.1.202:9200']
        index => 'redis-test-%{+YYYY.MM.dd}'
      }
    }

Run logstash with the redis-in.conf configuration file:

    # /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis-in.conf

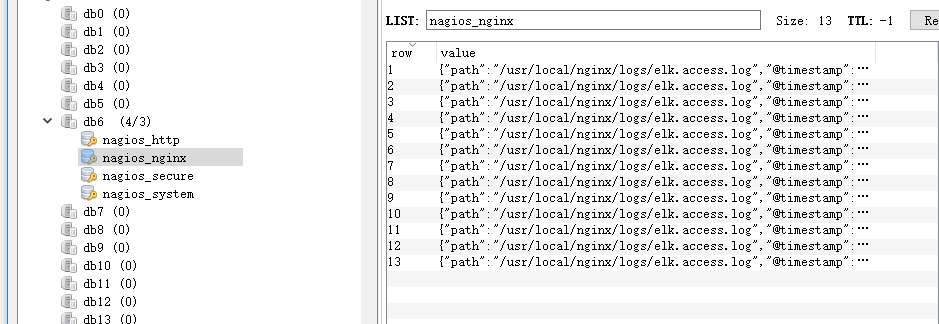

Change the earlier configuration so that all monitored log sources are first stored in redis and then shipped from redis to elasticsearch. Edit full.conf as follows:

    input {
      file { path => "/var/log/httpd/access_log" type => "http" start_position => "beginning" }
      file { path => "/usr/local/nginx/logs/elk.access.log" type => "nginx" start_position => "beginning" }
      file { path => "/var/log/secure" type => "secure" start_position => "beginning" }
      file { path => "/var/log/messages" type => "system" start_position => "beginning" }
    }
    output {
      if [type] == "http" {
        redis { host => "192.168.1.202" password => 'test' port => "6379" db => "6" data_type => "list" key => 'nagios_http' }
      }
      if [type] == "nginx" {
        redis { host => "192.168.1.202" password => 'test' port => "6379" db => "6" data_type => "list" key => 'nagios_nginx' }
      }
      if [type] == "secure" {
        redis { host => "192.168.1.202" password => 'test' port => "6379" db => "6" data_type => "list" key => 'nagios_secure' }
      }
      if [type] == "system" {
        redis { host => "192.168.1.202" password => 'test' port => "6379" db => "6" data_type => "list" key => 'nagios_system' }
      }
    }

Run logstash with this shipper configuration file:

    # /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/full.conf

Check in redis whether the data has been written (if a monitored log file produces no new entries, nothing is written to redis for it).

Read the redis data and write it to elasticsearch (this needs another host for the experiment). Edit the configuration file:

    # vim /etc/logstash/conf.d/redis-out.conf

Add the following:

    input {
      redis { type => "system" host => "192.168.1.202" password => 'test' port => "6379" db => "6" data_type => "list" key => 'nagios_system' batch_count => 1 }
      redis { type => "http" host => "192.168.1.202" password => 'test' port => "6379" db => "6" data_type => "list" key => 'nagios_http' batch_count => 1 }
      redis { type => "nginx" host => "192.168.1.202" password => 'test' port => "6379" db => "6" data_type => "list" key => 'nagios_nginx' batch_count => 1 }
      redis { type => "secure" host => "192.168.1.202" password => 'test' port => "6379" db => "6" data_type => "list" key => 'nagios_secure' batch_count => 1 }
    }
    output {
      if [type] == "system" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-system-%{+YYYY.MM.dd}" }
      }
      if [type] == "http" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-http-%{+YYYY.MM.dd}" }
      }
      if [type] == "nginx" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-nginx-%{+YYYY.MM.dd}" }
      }
      if [type] == "secure" {
        elasticsearch { hosts => ["192.168.1.202:9200"] index => "nagios-secure-%{+YYYY.MM.dd}" }
      }
    }

Note: the input is the data collected from the client, and the output is still stored in elasticsearch on 192.168.1.202. To store it on the current host instead, change hosts in the output to localhost; to display it in Kibana, Kibana then also has to be deployed on the local machine. The purpose of this design is loose coupling: logs are collected on the client and written to redis on the server (or to a local redis), and the output side then ships them to the ES server.

Run the command and check the result:

    # /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis-out.conf

The result is the same as sending the output directly to the ES server (this setup saves the logs to the redis database first and then reads them back out of redis)
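The shipper and indexer roles can be pictured with a small in-memory model. The Python sketch below uses a deque as a stand-in for one redis list: the shipper appends events to the tail and the indexer takes them from the head in batches of `batch_count`. It does not talk to redis or Logstash; the class `ListQueue` and the helper names are hypothetical and only illustrate the queue semantics.

```python
from collections import deque


class ListQueue:
    """In-memory stand-in for one redis list used as a queue."""

    def __init__(self):
        self._items = deque()

    def rpush(self, item):
        # Shipper side: append the event to the tail of the list.
        self._items.append(item)

    def pop_batch(self, batch_count=1):
        # Indexer side: take up to batch_count events from the head.
        batch = []
        while self._items and len(batch) < batch_count:
            batch.append(self._items.popleft())
        return batch


def ship(queue, lines):
    """Push every collected log line into the queue."""
    for line in lines:
        queue.rpush(line)


def index_all(queue, batch_count=1):
    """Drain the queue in batches and return events in indexing order."""
    indexed = []
    while True:
        batch = queue.pop_batch(batch_count)
        if not batch:
            break
        indexed.extend(batch)
    return indexed


q = ListQueue()
ship(q, ["event-1", "event-2", "event-3"])
print(index_all(q, batch_count=2))
```

Because the two sides only share the list, the shipper can keep writing while the indexer is stopped, and the backlog is drained in order once it returns.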

ELK in Production

1. Log classification

    System log    rsyslog    logstash syslog plugin
    Access log    nginx      logstash codec json
    Error log     file       logstash multiline
    Run log       file       logstash codec json
    Device log    syslog     logstash syslog plugin
    Debug log     file       logstash json or multiline

2. Log standardization

    Path      fixed
    Format    json wherever possible

3. Order of rollout: system log --> error log --> run log --> access log

Because ES keeps the logs permanently, old logs have to be deleted periodically. The following command deletes the indexes dated $n days ago (adjust the index pattern and date format to match the actual index names).

    curl -X DELETE http://xx.xx.com:9200/logstash-*-`date +%Y-%m-%d -d "-$n days"`
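A retention job usually needs to pick every index older than a cutoff, not only the one for a single day. The Python sketch below selects such indexes by name and builds the corresponding DELETE URLs without sending any request. It assumes index names end in `-YYYY.MM.dd`, as in the Logstash configurations in this article; the function names, the sample index list, and the base URL are hypothetical.

```python
import re
from datetime import date, timedelta

# Matches the date suffix written by index => "...-%{+YYYY.MM.dd}".
INDEX_DATE = re.compile(r"-(\d{4})\.(\d{2})\.(\d{2})$")


def expired_indices(names, today, keep_days):
    """Return index names whose date suffix is older than keep_days."""
    cutoff = today - timedelta(days=keep_days)
    expired = []
    for name in names:
        match = INDEX_DATE.search(name)
        if not match:
            continue  # skip indexes without a date suffix, such as .kibana
        year, month, day = map(int, match.groups())
        if date(year, month, day) < cutoff:
            expired.append(name)
    return expired


def delete_urls(base_url, names):
    """Build one DELETE target per expired index (no request is sent)."""
    return [f"{base_url.rstrip('/')}/{name}" for name in names]


indices = [
    "nagios-system-2017.06.01",
    "nagios-system-2017.06.13",
    "nagios-nginx-2017.05.30",
    ".kibana",
]
old = expired_indices(indices, today=date(2017, 6, 14), keep_days=7)
print(delete_urls("http://192.168.1.202:9200", old))
```

Each resulting URL can be passed to `curl -X DELETE`, for example from a daily cron job.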
